Forward-looking: At Nvidia's GTC 2024 conference on Monday, the company unveiled its Blackwell GPU platform that it says is designed for generative AI processing. The next-gen lineup includes the B200 GPU and GB200 Grace "superchip" that offer all the grunt needed by LLM inference workloads while substantially reducing energy consumption.

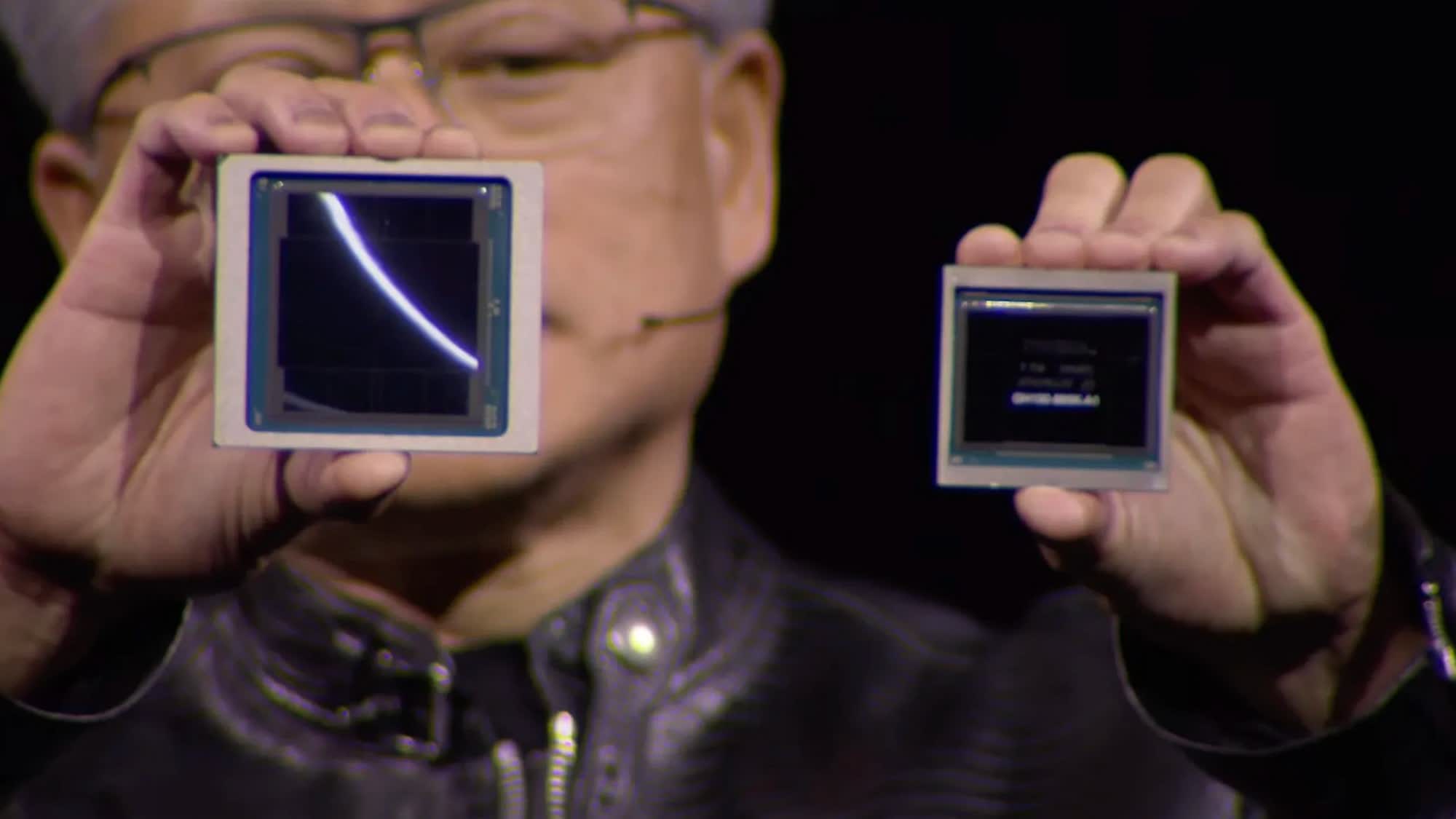

The new Nvidia B200 GPU packs 208 billion transistors and offers up to 20 petaflops of FP4 performance. It also includes a fast second-gen transformer engine featuring FP8 precision. The GB200 Grace combines two of these B200 chips with an Nvidia Grace CPU and connects them over an NVLink chip-to-chip (C2C) interface that delivers 900 GB/s of bidirectional bandwidth.

The company claims that the new accelerators would aid breakthroughs in data processing, engineering simulation, electronics design, automation, computer-aided drug design, and quantum computing.

According to Nvidia, just 2,000 Blackwell GPUs can train a 1.8 trillion-parameter LLM while consuming four megawatts of power, whereas the same task would previously have taken 8,000 Hopper GPUs and 15 megawatts.
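As a rough sanity check on that claim, the arithmetic can be sketched in a few lines of Python. The inputs are Nvidia's own quoted figures, not independent measurements. The helper name `kilowatts_per_gpu` is made up for illustration, and the sketch assumes that power is spread evenly across the GPUs in each cluster.

```python
# Vendor-quoted figures from the paragraph above (claims, not measurements).
blackwell = {"gpus": 2_000, "megawatts": 4.0}
hopper = {"gpus": 8_000, "megawatts": 15.0}


def kilowatts_per_gpu(cluster):
    """Average cluster power per GPU in kW (assumes an even split across GPUs)."""
    return cluster["megawatts"] * 1_000 / cluster["gpus"]


# How many times fewer GPUs and how much less power the Blackwell setup needs.
gpu_reduction = hopper["gpus"] / blackwell["gpus"]
power_reduction = hopper["megawatts"] / blackwell["megawatts"]

print(f"GPU count reduction: {gpu_reduction:.2f}x")
print(f"Power reduction:     {power_reduction:.2f}x")
print(f"Blackwell: {kilowatts_per_gpu(blackwell):.3f} kW per GPU")
print(f"Hopper:    {kilowatts_per_gpu(hopper):.3f} kW per GPU")
```

On these numbers the saving comes from needing a quarter of the GPUs rather than from lower draw per GPU: the per-GPU average is slightly higher for Blackwell (2 kW versus roughly 1.9 kW). The power ratio only translates into an equal energy ratio if both runs take the same amount of time, which the claim as quoted here does not specify.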

The company also claims that on a GPT-3 LLM benchmark with 175 billion parameters, the GB200 delivers a 7x performance uplift over an H100 along with 4x faster training. The new chips can potentially reduce operating costs and energy consumption by up to 25x.

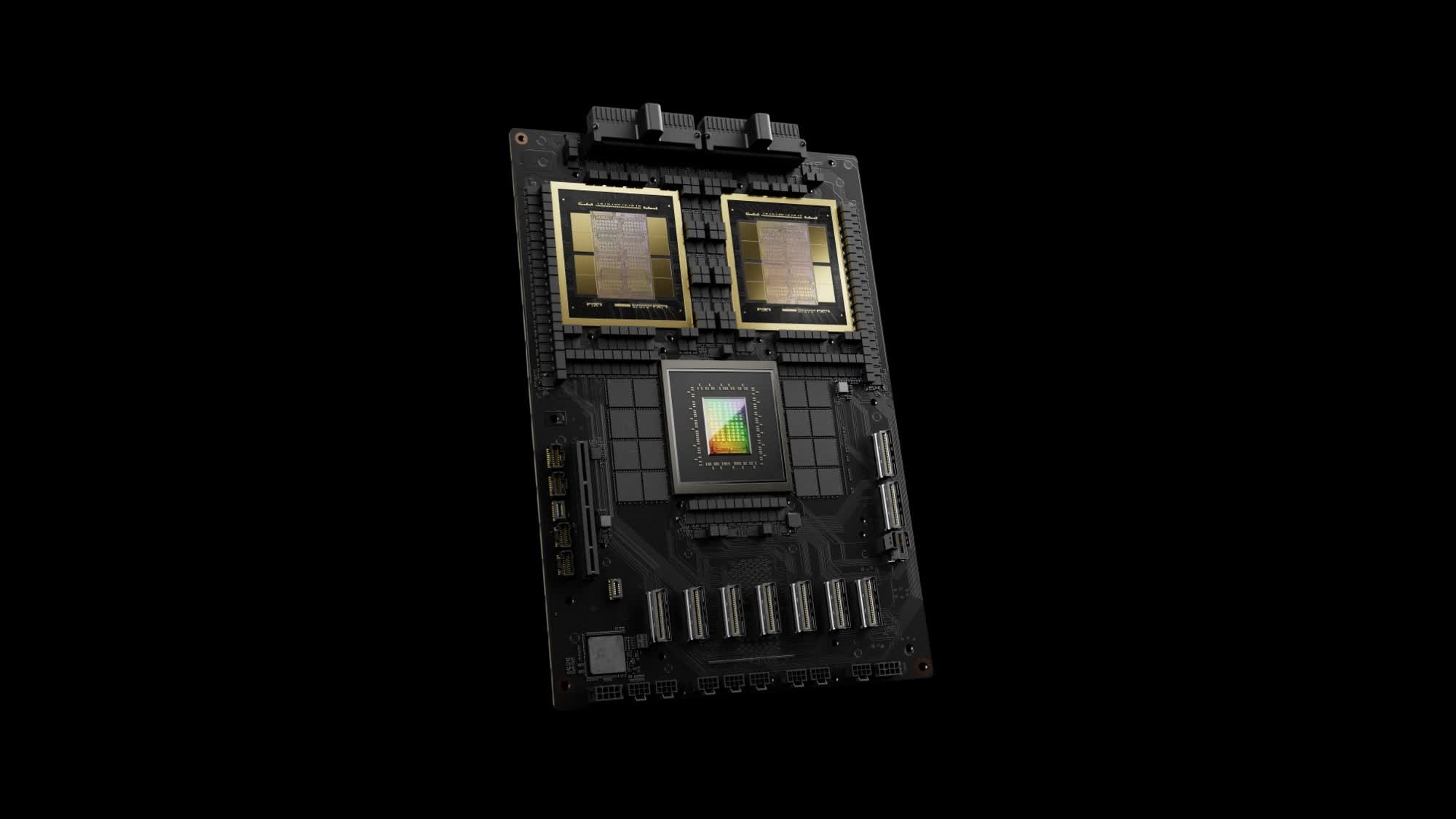

Alongside the individual chips, Nvidia also unveiled a multi-node, liquid-cooled, rack-scale system called the GB200 NVL72 for compute-intensive workloads. It combines 36 Grace Blackwell Superchips, for a total of 72 Blackwell GPUs and 36 Grace CPUs, interconnected by fifth-generation NVLink.

The system comes with 30TB of fast memory and offers 1.4 exaflops of AI performance for the newest DGX SuperPOD. Nvidia claims that it offers 30 times the performance of an H100 system in resource-intensive applications like the 1.8T parameter GPT-MoE.
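The rack-level numbers can be cross-checked against the per-chip figures quoted earlier in the article. The short Python sketch below does that under two assumptions that are not stated in the article: that the 1.4 exaflops figure is quoted at the same FP4 precision as the B200's 20 petaflops, and that performance divides evenly across the GPUs. The constant names are illustrative only.

```python
# Composition of one GB200 NVL72 rack, as described in the article.
SUPERCHIPS = 36
GPUS_PER_SUPERCHIP = 2  # each GB200 pairs two B200 GPUs...
CPUS_PER_SUPERCHIP = 1  # ...with one Grace CPU

total_gpus = SUPERCHIPS * GPUS_PER_SUPERCHIP
total_cpus = SUPERCHIPS * CPUS_PER_SUPERCHIP

# 1.4 exaflops expressed in petaflops (1 exaflop = 1,000 petaflops).
RACK_PETAFLOPS = 1_400

# Assumption: performance is split evenly across all GPUs in the rack.
per_gpu_petaflops = RACK_PETAFLOPS / total_gpus

print(f"GPUs per rack: {total_gpus}")
print(f"CPUs per rack: {total_cpus}")
print(f"Implied performance per GPU: {per_gpu_petaflops:.1f} petaflops")
```

The implied figure of roughly 19.4 petaflops per GPU sits just under the "up to 20 petaflops" quoted for a single B200, so the rack-level claim is at least consistent with the per-chip one.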

Many organizations and enterprises are expected to adopt Blackwell, and the list reads like a virtual who's who of Silicon Valley. The biggest US tech companies set to deploy the new GPUs include Amazon Web Services (AWS), Dell, Google, Meta, Microsoft, OpenAI, Oracle, Tesla, and xAI, among others.

Unfortunately for gaming enthusiasts, Nvidia CEO Jensen Huang did not reveal or even hint at anything about the Blackwell gaming GPUs that are expected to launch later this year or in early 2025.

The lineup is expected to be led by the GeForce RTX 5090 powered by the GB202 GPU, while the RTX 5080 will be underpinned by the GB203.