Forward-looking: For computer science students, generative AI isn't just the future – it's the present. Large language models are already reshaping how the next generation of programmers learns to code, and teachers are rethinking their approach in response.

A report by IEEE Spectrum has shown how generative AI is transforming academia. Students are using AI assistants like ChatGPT to grasp thorny concepts, summarize dense research papers, brainstorm ways to approach coding challenges, and prototype new ideas. Essentially, AI has become the ultimate study buddy for CS majors.

Professors can't ignore the AI wave either. They're experimenting with how to fold generative AI into their curricula while still ensuring students master core programming skills. It's a delicate balancing act as the technology rapidly evolves. "Given that large language models are evolving rapidly, we are still learning how to do this," Wei Tsang Ooi, an associate professor at the National University of Singapore, told the magazine.

One major shift is putting less emphasis on code syntax and more on higher-level problem-solving abilities. That's because with an AI assistant available, the hard part isn't writing code from scratch anymore. It's properly testing that code for bugs, breaking down large problems into smaller steps the AI can handle, and knitting together those steps into a complete solution.
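To make that workflow concrete, here is a minimal Python sketch of what decomposing, testing, and recombining might look like. The task (finding the most common words in a text) and every function name are illustrative assumptions, not examples taken from the report. Each function is small enough to hand to an AI assistant as a separate request and to check on its own.

```python
import re
from collections import Counter

# Hypothetical task: report the most common words in a piece of text.
# Each step below is a small, separately testable piece.

def tokenize(text: str) -> list[str]:
    """Step 1: split raw text into lowercase words."""
    return re.findall(r"[a-z']+", text.lower())

def count_words(words: list[str]) -> Counter:
    """Step 2: tally how often each word appears."""
    return Counter(words)

def top_n(counts: Counter, n: int) -> list[tuple[str, int]]:
    """Step 3: pick the n most frequent words, breaking ties alphabetically."""
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))[:n]

def most_common_words(text: str, n: int) -> list[tuple[str, int]]:
    """Knit the three steps together into the complete solution."""
    return top_n(count_words(tokenize(text)), n)

# Verify each piece on its own before trusting the combined result.
assert tokenize("The cat. The hat!") == ["the", "cat", "the", "hat"]
assert count_words(["a", "b", "a"]) == Counter({"a": 2, "b": 1})
assert top_n(Counter({"b": 2, "a": 2, "c": 1}), 2) == [("a", 2), ("b", 2)]
assert most_common_words("The cat saw the other cat", 2) == [("cat", 2), ("the", 2)]
```

The checks matter more than the functions here: whoever or whatever writes each step, the student still has to decide what correct behavior looks like and confirm it.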

Ooi notes that other crucial aspects of software design are the intangibles, such as identifying the correct problem to address and exploring potential solutions. He suggests that students should devote more time to optimizing software, considering ethical implications, and enhancing user-friendliness, rather than concentrating solely on coding syntax.

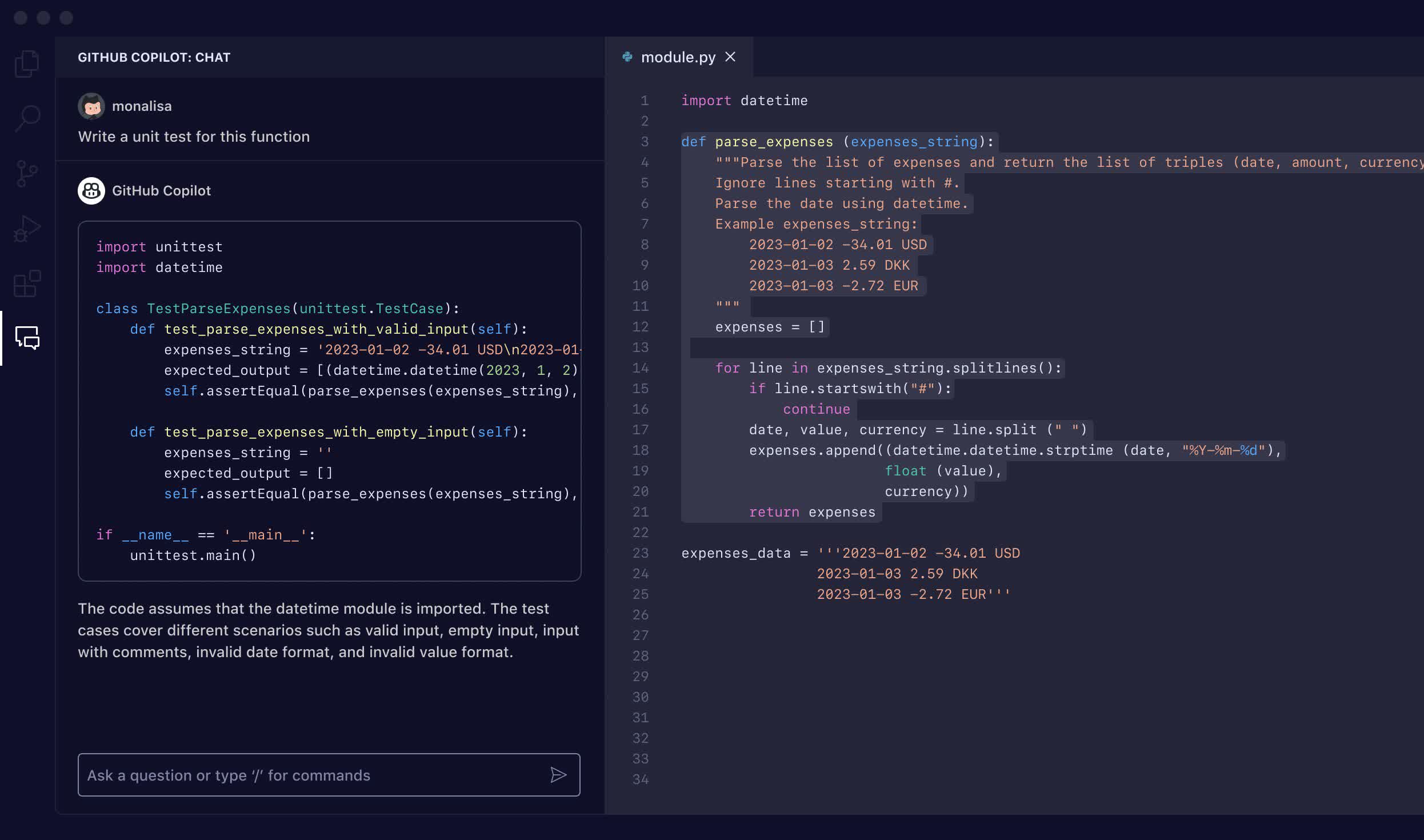

Tools like GitHub's Copilot are already doing the heavy lifting for programmers, and their role in coding is only set to grow from here.

Another professor teaching at UC San Diego told the magazine that students need to practice skills like decomposing problems into digestible pieces for an AI. "It's hard to find where in the curriculum that's taught – maybe in an algorithms or software engineering class, but those are advanced classes. Now, it becomes a priority in introductory classes."

Professors are getting creative with assignment designs and grading methods. Daniel Zingaro at the University of Toronto Mississauga now has student groups submit video walkthroughs explaining their AI-generated programs, rather than just sharing code files. The walkthroughs let him evaluate their overall software engineering process, not only the finished code.

Ooi says AI tools give instructors the flexibility to teach higher-level skills such as design, optimization, ethics, and user experience, because students spend less time wrangling syntax.

But generative AI has pitfalls: current models occasionally hallucinate and lack true understanding. Professors caution that students can't just blindly trust an AI's outputs; they must verify solutions themselves and maintain a skeptical mindset.

"We should be making AI a copilot – not the autopilot – for learning," Johnny Chang, a teaching assistant at Stanford University, advises in the report. Critical thinking matters and overreliance on AI tools can "short-circuit" the learning process.

There's optimism, though, that a proactively evolving pedagogy like this will pay dividends by better bridging the gap between classroom lessons and real-world job demands.

Tech CEOs hold similar, if harsher, views. Nvidia's Jensen Huang has suggested that coding skills may become obsolete in the near future thanks to AI. If he is right, cultivating higher-level reasoning over syntax knowledge could prove crucial for future job prospects. Emad Mostaque of Stability AI even predicted last year that most outsourced coders in India would be replaced by AI assistants by 2025.