Why it matters: You cannot talk about technology today without tripping over artificial intelligence (AI). In every conversation, in every corner of tech, the topic pops up. We agree that AI is useful and important, but we also think it is worth periodically taking some time to decode what everyone is talking about.

Editor's Note:

Guest author Jonathan Goldberg is the founder of D2D Advisory, a multi-functional consulting firm. Jonathan has developed growth strategies and alliances for companies in the mobile, networking, gaming, and software industries.

Part of the problem rests in the fact that AI has become a term owned by the marketing department. For the average non-tech person, AI sounds almost magical, conjuring images of talking robots and computers that tell jokes. The idea resonates at a deep level with everyone's visions of our science-fiction future. At the same time, almost everyone who actually works with AI has much more modest notions of what it can accomplish. As the saying goes: statistics is written in R, machine learning is written in Python, and AI is written in PowerPoint.

That being said, AI algorithms are permeating daily life in some very useful ways. AI systems are really good at pattern detection, which is valuable in many contexts. Those in tech recognize that AI methods have allowed the industry to make giant strides in image recognition and natural language processing, but there are many other use cases.

The use case most familiar to everyone is image processing on our phones. Most smartphones today process all photos and video with a variety of AI algorithms: image stabilization, autofocus, blur adjustment, and all the rest of the tricks. Industrial use cases are also numerous. For example, the oil and gas industry operates some of the largest supercomputing clusters to parse mountains (literally) of geological sensing data in search of reserves to drill, and AI can greatly facilitate that process.

John Deere does an immense amount of AI work, not only for autonomous tractors and combines but also for weather prediction to better prepare its sales and service logistics. The list goes on, but at some point it starts to lose meaning, because all of these things are just software. We find that whenever someone mentions AI, we can substitute the word 'software' and their meaning is usually unchanged. AI is just a way to solve a particular set of computer science problems very efficiently.

If this is true, it raises the question of the best way to put AI into things.

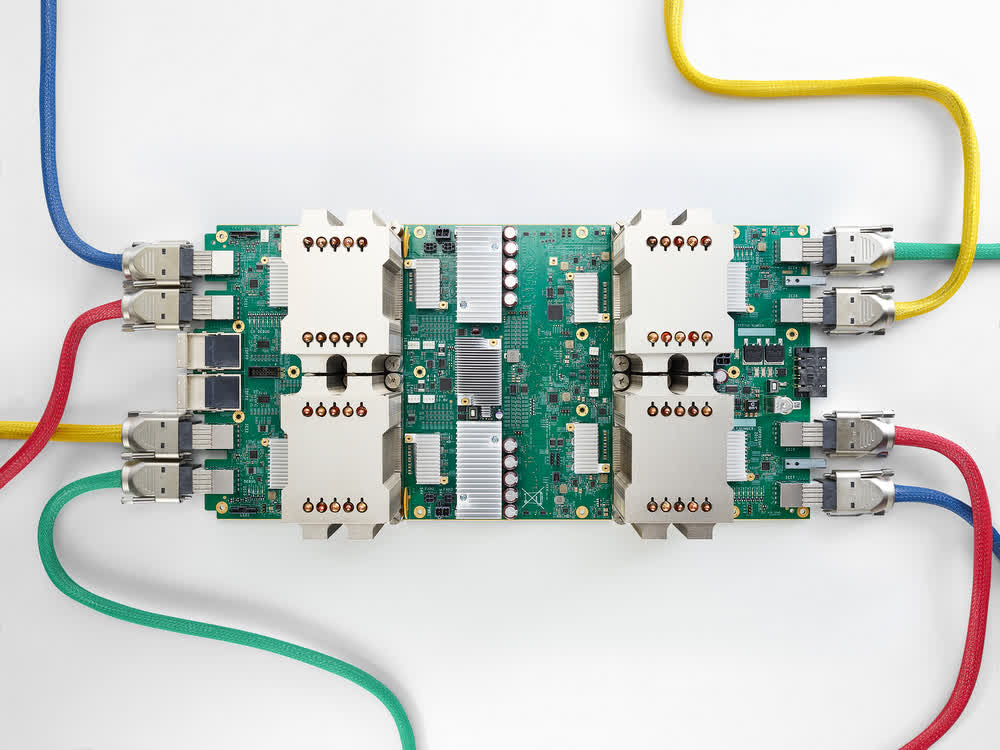

Google has pioneered one approach: designing its own TPU (Tensor Processing Unit), a chip that only does AI math, and deploying it at a very large scale. Others are now taking similar approaches, with several dozen companies (large firms and startups alike) designing chips for AI in the data center. But this kind of special-purpose chip does not make sense for many devices outside the data center. Again, the smartphone is a good example. Here power and, more importantly, space are highly constrained. The average smartphone does a lot of AI work, but there is no room for a standalone AI chip. The same is true for video cameras, speakers and microphones, and even, we would argue, for cars at this stage. Today's cars, which lack high-level autonomy, need a bit of AI for some things, but a standalone chip is likely too costly given the limited applications it would run.

This makes things very challenging for chip designers. Every customer's AI needs are a little different, which means AI chips, whether from startups or established companies, all have to be somewhat custom. Chip designers really do not like taking risks on custom chips without hard purchase orders, and customers are still loath to provide them.

This is prompting a range of responses. Many companies are taking the default path and buying GPUs from Nvidia, the lowest common denominator. Others are purchasing general-purpose processors with some AI blocks built in, as is the case with many of the automotive companies buying from Qualcomm.

Still others are designing their own chips. This is important because it opens the door for a new class of companies: the IP providers. A handful of companies now provide AI building blocks for others to incorporate into their homegrown chips. Licensing IP into chips is not a new practice, but the growth of AI is a major opportunity that is attracting considerable interest. The opportunity is heightened by the growing trend toward systems on a chip (SoCs), which all sorts of electronics, industrial and automotive companies are adopting in this world of roll-your-own silicon.

AI is clearly something different. Its inclusion in an ever-growing array of electronics is an important trend, and few would deny its utility. But the nature of AI, both fairly constrained around a small number of operations and yet highly varied in implementation, means that the semiconductor business supplying AI is ripe for change. That is a good opportunity for those ready to capitalize on it.