AI chips serve two functions. AI builders first take a large (or truly massive) set of data and run complex software to look for patterns in that data. Those patterns are expressed as a model, and so we have chips that "train" the system to generate a model.

Then this model is used to make a prediction from a new piece of data, and the model infers some likely outcome from that data. Here, inference chips run the new data against the model that has already been trained. These two purposes are very different.

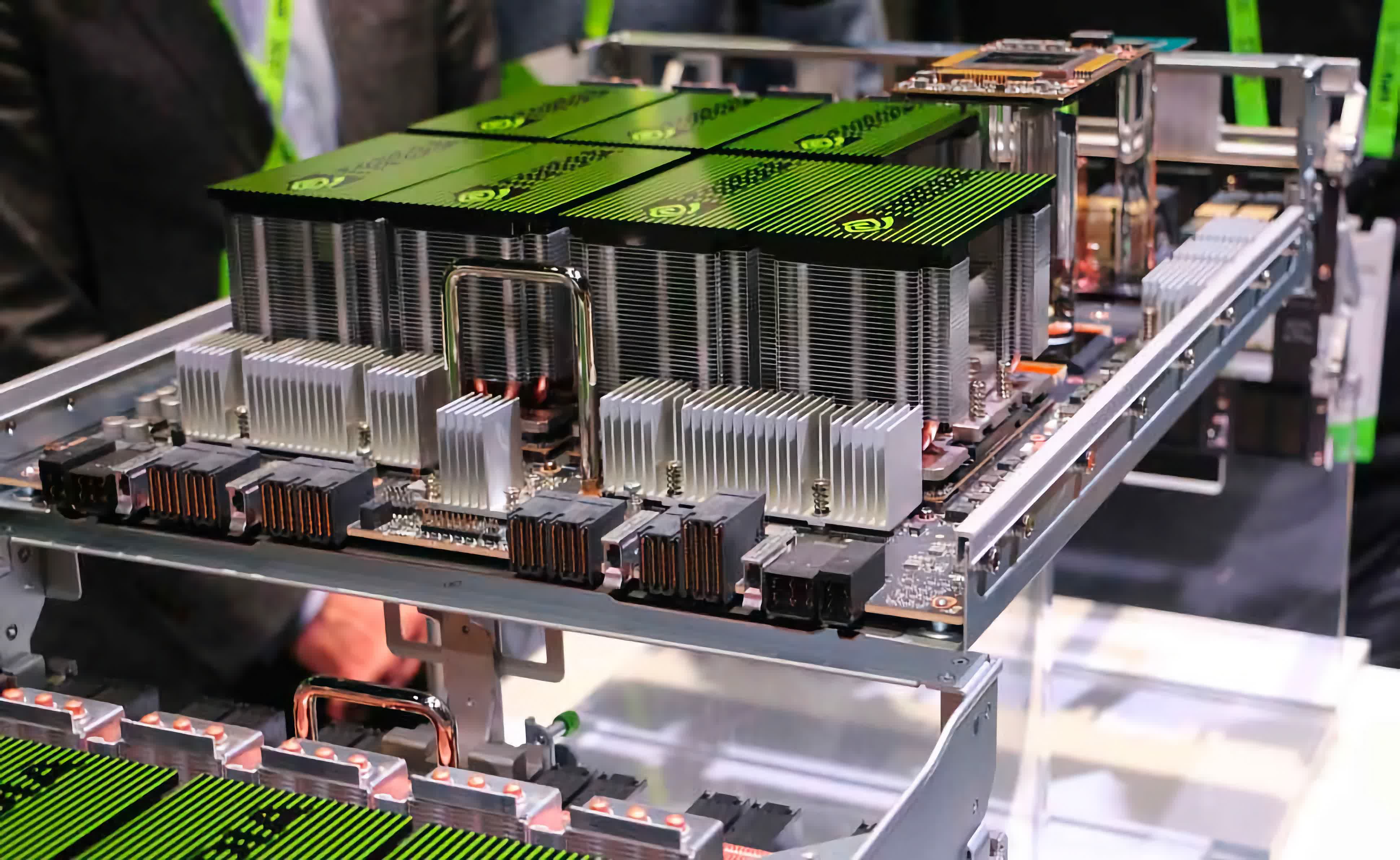

Training chips are designed to run full tilt, sometimes for weeks at a time, until the model is completed. Training chips thus tend to be large, "heavy iron."

Inference chips are more diverse. Some are used in data centers, while others are used at the "edge" in devices like smartphones and video cameras. They are designed to optimize for different things, such as power efficiency at the edge. And, of course, there are all sorts of in-between variants. The point is that there are big differences between "AI chips."

For chip designers, these are very different products, but as with all things semiconductors, what matters most is the software that runs on them. Viewed in this light, the situation is much simpler, but also dizzyingly complicated.

Simple because inference chips generally just need to run the models that come from the training chips (yes, we are oversimplifying). Complicated because the software that runs on training chips is hugely varied. And this is crucial. There are hundreds, probably thousands, of frameworks now used for training models. There are some incredibly good open-source libraries, but many of the big AI companies and hyperscalers also build their own.

Because the field for training software frameworks is so fragmented, it is effectively impossible to build a chip that is optimized for all of them. As we have pointed out in the past, small changes in software can effectively neuter the gains provided by special-purpose chips. Moreover, the people running the training software want that software to be highly optimized for the silicon on which it runs. The programmers running this software probably do not want to muck around with the intricacies of every chip; their life is hard enough building those training systems. They do not want to have to learn low-level code for one chip only to have to re-learn the hacks and shortcuts for a new one later. Even if that new chip offers "20%" better performance, the hassle of re-optimizing the code and learning the new chip renders that advantage moot.

Which brings us to CUDA – Nvidia's low-level chip programming framework. By this point, any software engineer working on training systems probably knows a fair bit about using CUDA. CUDA is not perfect, or elegant, or especially easy, but it is familiar. On such whimsies are vast fortunes built. Because the software environment for training is already so diverse and changing rapidly, the default solution for training chips is Nvidia GPUs.

The market for all these AI chips is a few billion dollars right now and is forecasted to grow 30% or 40% a year for the foreseeable future. One study from McKinsey (maybe not the most authoritative source here) puts the data center AI chip market at $13 billion to $15 billion by 2025 – by comparison the total CPU market is about $75 billion right now.

That $15 billion AI market breaks down to roughly two-thirds inference and one-third training. So this is a sizable market. One wrinkle in all this is that training chips are priced in the $1,000s or even $10,000s, while inference chips are priced in the $100s and up, which means training chips account for only a small share of total units shipped, roughly 10%-20%.
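To make the unit math concrete, here is a rough back-of-the-envelope sketch. The $15 billion market size and the one-third training revenue split come from the figures above; the average selling prices (`training_asp`, `inference_asp`) are purely illustrative assumptions picked from within the quoted price ranges, not reported numbers.

```python
def training_unit_share(market_usd, training_revenue_share, training_asp, inference_asp):
    """Return training chips as a fraction of all AI chips shipped (by unit count)."""
    training_revenue = market_usd * training_revenue_share
    inference_revenue = market_usd - training_revenue
    training_units = training_revenue / training_asp
    inference_units = inference_revenue / inference_asp
    return training_units / (training_units + inference_units)


share = training_unit_share(
    market_usd=15e9,               # upper end of the forecast cited in the text
    training_revenue_share=1 / 3,  # one-third training, two-thirds inference
    training_asp=2_000,            # ASSUMPTION: illustrative average training chip price
    inference_asp=500,             # ASSUMPTION: illustrative average inference chip price
)
print(f"Training share of units: {share:.1%}")  # prints "Training share of units: 11.1%"
```

With these assumed prices, training lands at the low end of the 10%-20% range. The result is very sensitive to the price gap: the wider the gap between training and inference prices, the smaller training's share of units becomes.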

In the long term, this is going to be important to how the market takes shape. Nvidia is going to have a lot of training margin, which it can bring to bear in competing for the inference market, similar to how Intel once used PC CPUs to fill its fabs and data center CPUs to generate much of its profits.

To be clear, Nvidia is not the only player in this market. AMD also makes GPUs, but never developed an effective (or at least widely adopted) alternative to CUDA. They have a fairly small share of the AI GPU market, and we do not see that changing any time soon.

There are a number of startups that tried to build training chips, but these mostly got impaled on the software problem above. And for what it's worth, AWS has also deployed its own internally designed training chip, cleverly named Trainium. From what we can tell, this has met with modest success, since AWS does not have any clear advantage here other than its own (massive) internal workloads. However, we understand they are moving forward with the next generation of Trainium, so they must be happy with the results so far.

Some of the other hyperscalers may be building their own training chips as well, notably Google, which has new variants of its TPU coming soon that are specifically tuned for training. And that is the market. Put simply, we think most people in the market for training compute will look to build their models on Nvidia GPUs.