Something we hear a lot is that CPU performance doesn't matter for 4K gaming. Without proper context, CPU benchmark data can be misinterpreted. Let us explain what's what with some hard data.
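
The article's core point, that the frame rate rather than the resolution decides whether the CPU matters, can be illustrated with a deliberately simplified model: the delivered frame rate is roughly the lower of what the CPU can prepare and what the GPU can render. This is a sketch under stated assumptions, not the article's test method; the function names and every frame-rate figure below are hypothetical.

```python
# Simplified bottleneck model (an assumption, not the article's test method):
# a frame is only shown once the CPU has prepared it and the GPU has rendered it,
# so the delivered frame rate is roughly capped by the slower of the two.

def delivered_fps(cpu_fps: float, gpu_fps: float) -> float:
    """Frame rate allowed by the slower component (ignores frame pacing and 1% lows)."""
    return min(cpu_fps, gpu_fps)


def limiting_part(cpu_fps: float, gpu_fps: float) -> str:
    """Name the component that sets the cap."""
    return "CPU" if cpu_fps < gpu_fps else "GPU"


# Hypothetical limits: the CPU cap barely depends on resolution,
# while the GPU cap falls as the pixel count rises.
CPU_LIMITS = {"slow CPU": 70.0, "fast CPU": 140.0}
GPU_LIMITS = {"1080p": 200.0, "1440p": 150.0, "4K": 90.0}

if __name__ == "__main__":
    for res, gpu_fps in GPU_LIMITS.items():
        for cpu, cpu_fps in CPU_LIMITS.items():
            fps = delivered_fps(cpu_fps, gpu_fps)
            part = limiting_part(cpu_fps, gpu_fps)
            print(f"{res:>5} | {cpu:<8} | {fps:5.1f} fps ({part}-bound)")
```

With these made-up numbers, the slow CPU caps every resolution at 70 fps, 4K included, while the fast CPU only becomes GPU-bound at 4K (90 fps). Lowering the GPU cap (heavier settings or a slower card) pushes both CPUs toward the same number.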

"CPUs Don't Matter For 4K Gaming"... Wrong!

- Thread starter Steve

yRaz

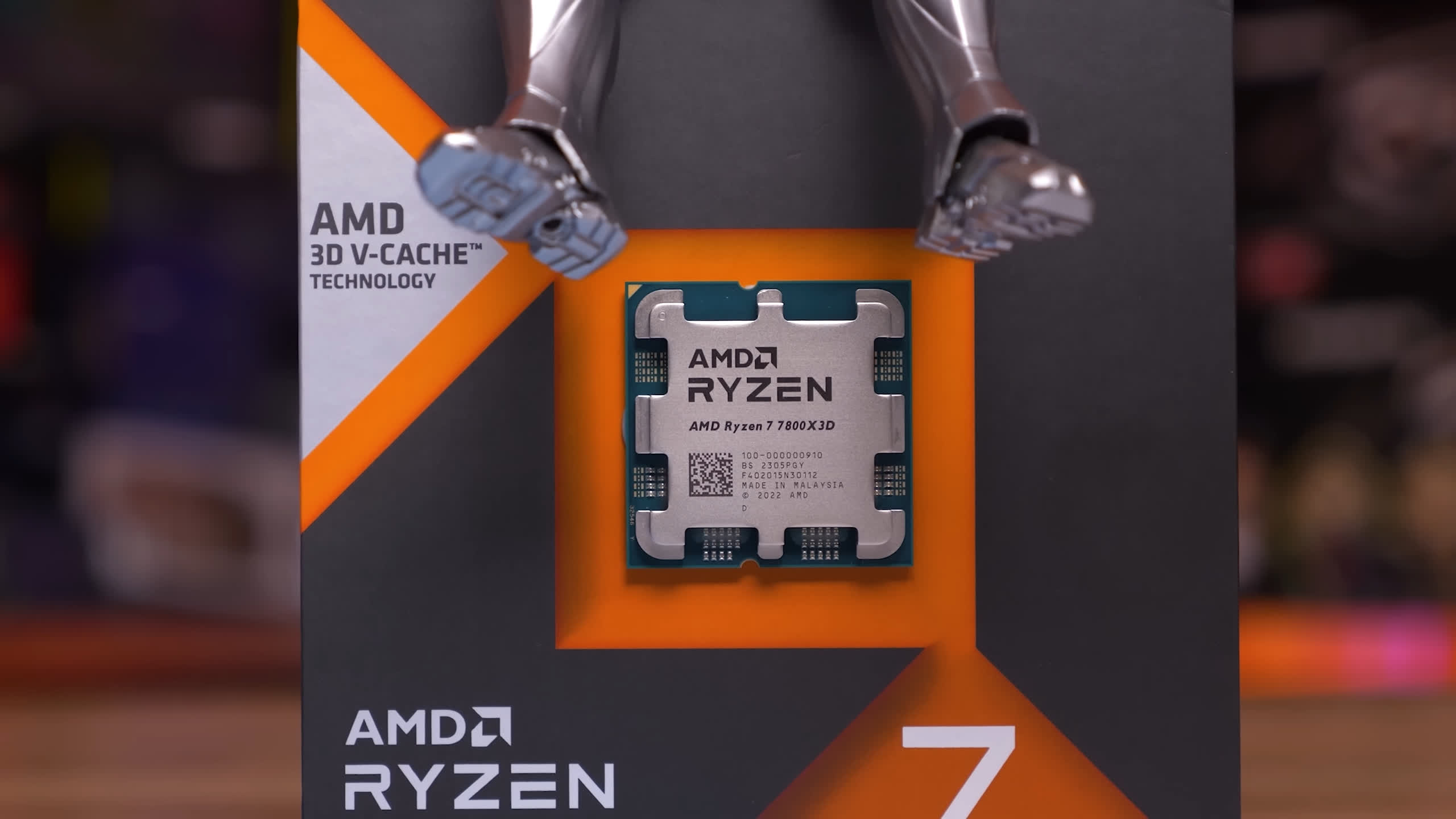

Be nice if you included a 5800X and a 5600. I get that the 3600 was a popular CPU (I even still have two 1800Xs running in my server room), but a 5600 or even a 5700X is a cheap upgrade for someone running a 3600. Anyone running a 3600 could drop in a 5800X3D for less than the cost of upgrading to a new mobo and a 7600. And from everything I've seen, the 5800X3D is better than the 7600 in 90% of games.

First off, people playing at 2160p, like me, don't play at low, or medium settings. They play at max settings. So your data is not representative of a REAL Use Case.

Second off, if you look at your data, the only way to be CPU bound, and using max settings, is with a 4090.

Lastly, you have just proven that if you don't use a 4090, you will likely see barely any improvement at 2160p from a better CPU, so I don't understand the point of this article at all, since EVERY time you published CPU benchmarks, you LITERALLY dismissed any feedback requesting 2160p results on the grounds that you would be GPU-bound. And now you are doing a 180?

I can't follow your logic anymore.

The fact of the matter is that if you play games at 2160p with a high-end GPU:

- You will most likely play with really close to maximum graphical settings

- The title you are going to play will be GPU bound

Achaios

GPUs still have a way to go. You can see a 4090 achieving 40 FPS at 4K ULTRA; this is ridiculous.

Regarding CPUs and 4K (or 3.5K), I have never played an FPS game and I don't think I ever will. FPS gamers who are after over 9000 FPS constitute a niche. I only play non-FPS games, and I bet there are more gamers like me.

Gamers like me will do just fine with an old CPU. FPS gamers, on the other hand, already own a modern fast CPU, so I don't really get the point of the OP.

You didn't read the article...

Be nice if you included a 5800X and a 5600. I get that the 3600 was a popular CPU (I even still have two 1800Xs running in my server room), but a 5600 or even a 5700X is a cheap upgrade for someone running a 3600. Anyone running a 3600 could drop in a 5800X3D for less than the cost of upgrading to a new mobo and a 7600. And from everything I've seen, the 5800X3D is better than the 7600 in 90% of games.

"Now, we can already envision comments claiming that the data here is misleading because the Ryzen 5 3600 is so much slower than the 7800X3D, and if that's how you feel, we're sorry to say it, but you've missed the point. Yes, we did use an extreme example to illustrate the point we're trying to make, but the point is, it's the frame rate that's important, not the resolution."

However, I don't agree with Steve, because the only way to end up CPU-bound at 2160p is to use either a 4090 or low or medium settings.

Like I was explaining just before, if you play at 2160p, it is because you crave image FIDELITY, which means you will likely use max settings ALL THE TIME, which also means that your CPU will have almost no impact on your frame rate at 2160p unless you have a 4090.

The game sample is way too small; once you get to a 30+ game sample at 4K ultra/max, even a 2008 i7 920 will get within 20% of an i7 13700KF with an RTX 4080 Super or RX 7900 XT.

Been doing these kinds of tests for years; where you see the real difference at 4K is that Core 2 Quads are usually stuck in the 30s FPS regardless of resolution.

yRaz

I did read the article, and the CPU can have massive implications for gameplay, which is part of the reason I said something. If fidelity-focused games are all you play, then naturally. I play EvE and ESO, and I can play those games maxed out at 4K. The problem is when there are lots of other players in large battles, and that is a problem at all resolutions. There are games that aren't graphically demanding but are incredibly CPU-heavy.

You didn't read the article...

"Now, we can already envision comments claiming that the data here is misleading because the Ryzen 5 3600 is so much slower than the 7800X3D, and if that's how you feel, we're sorry to say it, but you've missed the point. Yes, we did use an extreme example to illustrate the point we're trying to make, but the point is, it's the frame rate that's important, not the resolution."

However, I don't agree with Steve, because the only way to end up CPU-bound at 2160p is to use either a 4090 or low or medium settings.

Like I was explaining just before, if you play at 2160p, it is because you crave image FIDELITY, which means you will likely use max settings ALL THE TIME, which also means that your CPU will have almost no impact on your frame rate at 2160p unless you have a 4090.

My biggest issue with these benchmarks is the lack of relevant CPU heavy games at 4k and the absurdity of running a 4090 with a 3600.

Burty117

First off, people playing at 2160p, like me, don't play at low, or medium settings. They play at max settings. So your data is not representative of a REAL Use Case.

Neither did you, by the looks of it; they benched everything using different quality settings, including High, Very High, Ultra, etc.

You didn't read the article...

Starfield and Hogwarts Legacy both clearly benefitted from a better CPU in their highest quality settings.

Adhmuz

This review may be addressing some comments lately regarding 4K benchmarks, but I still feel it misses several points and skews the results in favor of the OP by artificially introducing a serious bottleneck in a situation that will likely never present itself.

Sure, you slapped the R5 3600 community hard in the face with RTX 4090 results; however, I don't see them ever running 4090s to begin with. They would more than likely have upgraded away from the 3600 long before dropping the absurd amount of money for a 4090.

Given that the data only contains two CPUs, it's really hard to draw any real conclusions for my use case. I've always purchased top-tier CPUs and kept them for as long as possible, pairing them with multiple generations of upper-mid-range GPUs, because at the resolution (4K) and settings I use, the CPU takes a really long time to become a bottleneck.

Theinsanegamer

The point was to address the effect CPUs have at 4K, not how likely the build is. Why is this hard for people to understand? The 4K comments are all the same: "well that's not a REALISTIC BUILD" or "why don't you test CPUs at 4K" BS.

This review may be addressing some comments lately regarding 4K benchmarks, but I still feel it misses several points and skews the results in favor of the OP by artificially introducing a serious bottleneck in a situation that will likely never present itself.

Sure, you slapped the R5 3600 community hard in the face with RTX 4090 results; however, I don't see them ever running 4090s to begin with. They would more than likely have upgraded away from the 3600 long before dropping the absurd amount of money for a 4090.

Given that the data only contains two CPUs, it's really hard to draw any real conclusions for my use case. I've always purchased top-tier CPUs and kept them for as long as possible, pairing them with multiple generations of upper-mid-range GPUs, because at the resolution (4K) and settings I use, the CPU takes a really long time to become a bottleneck.

fadingfool

It was never just "CPUs Don't Matter For 4K Gaming" (though we have all seen this declared); the issue has always been more nuanced than that. I have an aging CPU with a Radeon RX 7800 XT, and yes, I know that with a better CPU alone I could gain an extra 10%-15% performance from my GPU (synthetic testing). But I am also gaming at 1440p 75Hz, so as the 1440p results above suggest, I may as well turn on all the bells and whistles until I see performance drop to the 75Hz point (in some cases this may mean going up to 4K using Virtual Super Resolution). So in some ways I am targeting a frame rate and adjusting settings to match (as I believe most gamers do) rather than targeting a resolution and seeing how many frames I can get. My next upgrade will probably be a motherboard/CPU/RAM combo, but that would also require a new monitor to appreciate, so it will be some time yet. The more scenarios tested, the better the gauge of performance, so I truly appreciate the work Steve and the team do at Hardware Unboxed, even if I can only dream of having the parts at my fingertips to maximise my PC's performance.
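
The approach described above, fixing a frame-rate target (here the 75Hz refresh rate) and raising settings until performance drops below it, can be sketched as a simple walk over presets. This is an illustrative sketch only: the function name, preset names, and frame-rate estimates are hypothetical, and it assumes frame rate falls as settings rise.

```python
from typing import Dict, List, Optional

# Sketch of "target a frame rate, then raise settings": walk presets from
# lightest to heaviest and keep the last one that still meets the target.
# Assumes frame rate falls as settings rise; preset names and numbers are hypothetical.

def pick_preset(estimated_fps: Dict[str, float],
                presets_low_to_high: List[str],
                target_fps: float) -> Optional[str]:
    chosen: Optional[str] = None
    for preset in presets_low_to_high:
        if estimated_fps[preset] < target_fps:
            break  # this preset drops below the target, so stop raising settings
        chosen = preset
    return chosen  # None means even the lightest preset misses the target


PRESETS = ["low", "medium", "high", "ultra", "ultra + VSR 4K"]
ESTIMATES = {"low": 160.0, "medium": 130.0, "high": 105.0,
             "ultra": 82.0, "ultra + VSR 4K": 58.0}

if __name__ == "__main__":
    print(pick_preset(ESTIMATES, PRESETS, target_fps=75.0))  # ultra
```

With these made-up estimates, a 75Hz target settles on "ultra", while a 90 fps target would settle on "high". A faster CPU would only change the choice if it lifted the estimates for the presets near the target.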

kira setsu

It's not quite true that if you're playing at 4K you'd be using max settings.

First off, people playing at 2160p, like me, don't play at low, or medium settings. They play at max settings. So your data is not representative of a REAL Use Case.

Second off, if you look at your data, the only way to be CPU bound, and using max settings, is with a 4090.

Lastly, you have just proven that if you don't use a 4090, you will likely see barely any improvement at 2160p from a better CPU, so I don't understand the point of this article at all, since EVERY time you published CPU benchmarks, you LITERALLY dismissed any feedback requesting 2160p results on the grounds that you would be GPU-bound. And now you are doing a 180?

I can't follow your logic anymore.

The fact of the matter is that if you play games at 2160p with a high-end GPU:

- You will most likely play with really close to maximum graphical settings

- The title you are going to play will be GPU bound

One of the biggest benefits of 4K gaming is clarity. Even when a game is at its lowest settings, just being viewed at 4K is a massive visual bump, and I know a lot of people who play at 4K but at low or medium settings because the clarity is an edge.

Compare a game like FF14 played at 1080p versus 4K: there's a lot of text on the screen, and the game isn't particularly heavy to run (I'm ignoring the upcoming graphics update). Its lowest settings may not make the characters and world look their best, but at 4K, as far as text, hotbars, the minimap, etc. go, it's a massive jump.

Adhmuz

Why is it hard to understand that people WANT realistic data, not a condescending attempt at proving a point that means next to nothing to most people?

The point was to address the effect CPUs have at 4K, not how likely the build is. Why is this hard for people to understand? The 4K comments are all the same: "well that's not a REALISTIC BUILD" or "why don't you test CPUs at 4K" BS.

No kidding the 7800X3D delivers more FPS than a multi-generation-old mid-tier CPU when paired with the two fastest GPUs available; I would never argue that.

And in fact, the real takeaway here is that the R5 3600 can still run the games shown at 4K 60 FPS average, which is impressive on its own, so kudos for that information, I suppose.

Kn0xx

Thank you for the data provided; it's a test-case scenario to make a point. For me, it shows that a low-end CPU can't keep up with mid-tier and higher GPUs regardless of resolution... and not "just at 1080p".

Probably the people with their own plot methods can provide a better "real case scenario".

Marco Mint

The thing I notice after upgrading to a better cpu is not fps, but the smoothness of game play. Maybe they do increase the fps, but I don't notice that. It's the motion that seems to be much better.

Even back in the day with Crysis, when I upgraded from a highly overclocked 2600K to a 4790K with the same GPU, what I noticed was that it was silky smooth afterwards, and I hadn't noticed any issues before. You would expect a game like Crysis to benefit more noticeably from a new GPU.

Same thing happened when I upgraded to my 7950X. Games like 7 Days to Die just ran way smoother. Maybe the RAM amount and speed help too, as a new system obviously means new, faster RAM. It seems I always double the RAM amount with each build, too.

colossusrage

I think a lot of you are making arguments against things that weren't tested or brought up in this article. The test was whether the statement "CPUs don't matter for 4K gaming" holds up, and I think they proved that it doesn't: though every game chosen uses the CPU quite heavily, it's clear that a CPU can matter in 4K gaming.

Now, does that necessarily make the statement "CPUs don't matter as much in 4K gaming" false? No. But that's another test.

Skjorn

Hogwarts is one of the worst-performing AAA UE4 games; how in the hell could you consider any data from that game reliable when you can lose half your frame rate just walking down a hallway? Starfield never ran very well either.

CS2 is much more popular.

I'd rather see CPU and RT benchmarks. RT is much heavier on the CPU than people talk about.

There's more useful info in CPU/RT testing than in how much a 3600 will bottleneck a 4090.

Starfals

Exactly, everyone that I know has a 5600 and a 5800X. I wish they had been included so it could clearly show the difference to these friends. Anyway, I got a 7800X3D now and my whole PC is much smoother and faster. I came from a 4-core CPU that could barely hit 3.4 GHz. Quite the upgrade, I'd say, and totally worth it too; shame I had to pay 650 euro for it. Now it's down to 350.

Be nice if you included a 5800X and a 5600. I get that the 3600 was a popular CPU (I even still have two 1800Xs running in my server room), but a 5600 or even a 5700X is a cheap upgrade for someone running a 3600. Anyone running a 3600 could drop in a 5800X3D for less than the cost of upgrading to a new mobo and a 7600. And from everything I've seen, the 5800X3D is better than the 7600 in 90% of games.

I literally used Steve's own statement. You are missing the point that this is not a review but just a demonstration that you can be CPU-bound at 2160p.

I did read the article, and the CPU can have massive implications for gameplay, which is part of the reason I said something. If fidelity-focused games are all you play, then naturally. I play EvE and ESO, and I can play those games maxed out at 4K. The problem is when there are lots of other players in large battles, and that is a problem at all resolutions. There are games that aren't graphically demanding but are incredibly CPU-heavy.

My biggest issue with these benchmarks is the lack of relevant CPU heavy games at 4k and the absurdity of running a 4090 with a 3600.

My point is that you don't play at 2160p to play at low or medium settings. You are better off dropping your resolution a tier than doing that, meaning all the low and medium numbers at 2160p are biased toward a proof of concept and not a REAL Use Case.

And when it comes to High/Ultra settings, unless you have a 4090, any other GPU is going to provide you similar FPS regardless of the CPU.

You don't play games at 2160p low settings with an enthusiast GPU...

It's not quite true that if you're playing at 4K you'd be using max settings.

One of the biggest benefits of 4K gaming is clarity. Even when a game is at its lowest settings, just being viewed at 4K is a massive visual bump, and I know a lot of people who play at 4K but at low or medium settings because the clarity is an edge.

Compare a game like FF14 played at 1080p versus 4K: there's a lot of text on the screen, and the game isn't particularly heavy to run (I'm ignoring the upcoming graphics update). Its lowest settings may not make the characters and world look their best, but at 4K, as far as text, hotbars, the minimap, etc. go, it's a massive jump.

You will not make me believe this nonsense.

Go back, look at the numbers, and look back at my comment.

Neither did you, by the looks of it; they benched everything using different quality settings, including High, Very High, Ultra, etc.

Starfield and Hogwarts Legacy both clearly benefitted from a better CPU in their highest quality settings.

At 2160p, it is only true either at low or medium settings OR with a 4090.

There is no difference at High/Ultra with an XTX at 2160p.

Not to mention that these are two atypical games Steve used to prove a point. In the majority of cases, you will be GPU-bound at 2160p. The data is all over the internet.

Man does tests to examine a single, very specific topic.

Man posts results to the internet.

Commenters argue all their personal favorite topics not addressed by the specific test.

The internet at its finest.

yRaz

No, it seems that you are missing mine. There are gaming applications outside what was benchmarked showing that CPUs are very important for things beyond graphical fidelity, and to ignore that is careless and unprofessional. Go play a city builder, a strategy game, or basically anything physics-heavy.

I literally used Steve's own statement. You are missing the point that this is not a review but just a demonstration that you can be CPU-bound at 2160p.

My point is that you don't play at 2160p to play at low or medium settings. You are better off dropping your resolution a tier than doing that, meaning all the low and medium numbers at 2160p are biased toward a proof of concept and not a REAL Use Case.

And when it comes to High/Ultra settings, unless you have a 4090, any other GPU is going to provide you similar FPS regardless of the CPU.

You can play EvE at 4K max settings on a 1060, but enter an area with lots going on? In large battles, it can bring my 5800X to its knees.

The benchmarks don't cover enough data points to make a proper point. At first it wasn't a big deal to me, but you've shown yourself to be quite a pest around the forums. I don't care who you're quoting; that isn't an excuse for you to keep defending your lack of analytical ability.

TechSpot is dedicated to computer enthusiasts and power users.
