As CPUs and GPUs continue to get more powerful with each new generation, the push for ever more realistic graphics in blockbuster games shows no signs of slowing down. Today's best-looking titles already look stunning, so how much better can they possibly get?

Which technologies will become as commonplace as texture filtering or normal mapping are today? What systems will help developers reach these higher standards? Join us as we take a look at what awaits us in the future of 3D graphics.

Where do we come from?

Before we head off into the future, it's worth taking stock of the advances in 3D graphics over the years. Many of the theoretical aspects of 3D rendering (e.g. vertex transformations, viewport projections, lighting models) are decades old, if not older.

Take the humble z-buffer, as an example. This is nothing more than a portion of memory used to store depth information about objects in a scene. It's primarily used to determine whether or not a surface is hidden behind something else, which allows hidden objects to be discarded instead of rendered. The same depth information can also be used to generate shadows.
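To make the idea concrete, here is a minimal sketch of a depth test in Python. It is an illustration only, not how any particular GPU implements it, and the names `make_buffers` and `write_fragment` are made up for this example. Smaller depth values are assumed to be closer to the camera.

```python
import math

def make_buffers(width, height):
    """Create a depth buffer (initialised to 'infinitely far') and a color buffer."""
    depth = [[math.inf] * width for _ in range(height)]
    color = [[None] * width for _ in range(height)]
    return depth, color

def write_fragment(depth, color, x, y, z, c):
    """Keep the fragment only if it is closer than whatever is already stored."""
    if z < depth[y][x]:
        depth[y][x] = z   # remember the new nearest depth
        color[y][x] = c   # and the surface that produced it
        return True
    return False          # hidden behind something else, so discard it

depth, color = make_buffers(4, 4)
write_fragment(depth, color, 1, 1, 5.0, "far wall")
write_fragment(depth, color, 1, 1, 2.0, "crate")
write_fragment(depth, color, 1, 1, 3.0, "hidden pipe")  # rejected by the depth test
print(color[1][1])
```

The pixel ends up showing the crate regardless of the order in which the three surfaces arrive, which is the whole appeal of the technique.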

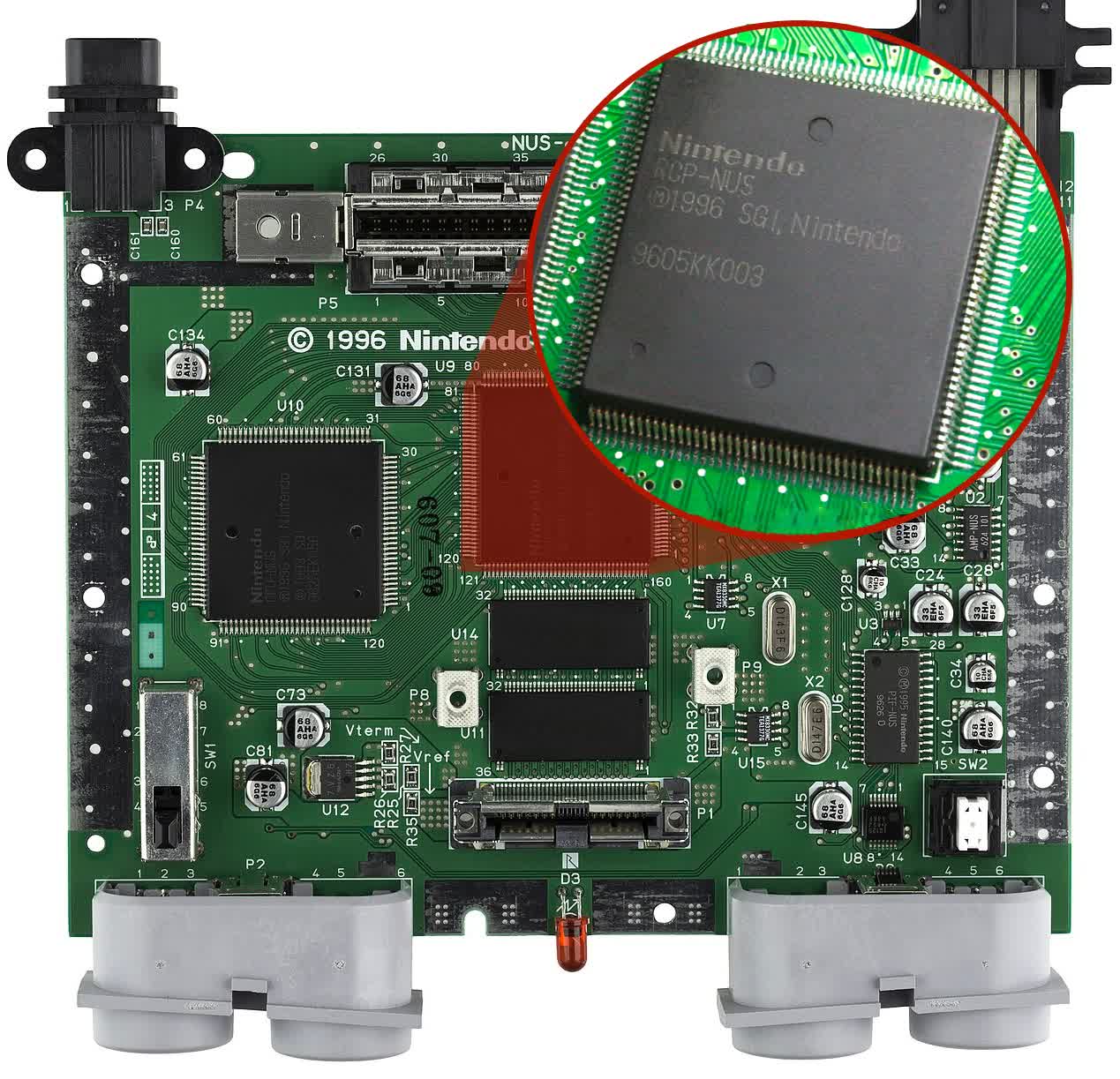

The concept of the z-buffer is generally attributed to Wolfgang Straßer, who described it in 1974 while a Ph.D. student at TU Berlin. The first commercial hardware to make use of the buffer appeared within 5 years or so, but the general public would have to wait over 20 years, until the mid-90s, for the arrival of the Nintendo 64 and its z-buffer-enabled Reality co-processor.

The same is true for other rendering standards: Gouraud shading (Henri Gouraud, 1971); texture mapping (Edwin Catmull, 1974); bump mapping (Jim Blinn, 1978). It would be decades before any casual gamer got to see these things in action on home entertainment systems.

And it was the likes of Sony, Sega, and Nintendo that did this, with their 3D-focused consoles. By the standards of today, games for those early machines, such as the first PlayStation, were primitive in the extreme, but developers were still getting to grips with 'modern' rendering.

At the same time, PC hardware vendors were also getting in on the 3D act, and in just 5 years, desktop computers around the world were sporting graphics cards boasting support for shaders, z-buffers, texture mapping, et al.

It would be the evolution of graphics chips that would drive the development of 3D graphics, but predicting how games might look in the near future was somewhat tricky, despite the obvious path that GPUs would take.

One company gave it a go: MadOnion (later Futuremark) attempted to show everyone what graphics might look like with 3DMark, based on feedback it received from other developers and hardware vendors. But all its efforts did was demonstrate that the evolution in graphics, both software and hardware, was too rapid at that time to accurately predict how things were going to turn out.

Everything that could be measured in numbers was increasing at a frantic rate. In the early days of 3D games, the number of polygons per frame was often used as a selling point, but now it never gets mentioned, because it's just a ridiculous amount.

For example, the first Tomb Raider running on the PlayStation used 250 triangles for Lara, whereas the PC version of Shadow of the Tomb Raider can use up to 200,000 for the main character.

Texture size and the number of textures used have ballooned each year, too, and we're now at the point where even just an HD texture pack can be the same size as the rest of the game's assets combined (take a step forward, Far Cry 6).

This constant increase in polygons and textures is an unfortunate necessity in the search for ever more realistic, or rather, more detailed graphics.

While there's still plenty of ongoing research into procedural texture generation (which uses algorithms to generate textures on the fly) and other shortcuts, the traditional use of bitmap images for textures, along with vertices and indices for models, isn't going to disappear any time soon.
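As a toy illustration of what "generating a texture on the fly" means, the Python sketch below computes a checkerboard pattern from texture coordinates instead of reading it from a stored bitmap. Real procedural systems use far richer functions (noise, fractals, and so on); the function names and the `tiles` parameter here are assumptions for the example.

```python
def checker_texel(u, v, tiles=8):
    """Return 0.0 or 1.0 for texture coordinates u, v in the range [0, 1)."""
    return float((int(u * tiles) + int(v * tiles)) % 2)

def generate_texture(size, tiles=8):
    """Build a size x size texture by evaluating the pattern at each texel centre."""
    return [
        [checker_texel((x + 0.5) / size, (y + 0.5) / size, tiles) for x in range(size)]
        for y in range(size)
    ]

texture = generate_texture(16, tiles=2)
for row in texture[::4]:
    print("".join("#" if t else "." for t in row))
```

Nothing is stored apart from the formula, so the same function can produce a 16 x 16 or a 16K x 16K texture. The trade-off is that every texel costs computation rather than memory.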

So we can start our journey into the future by being certain about one thing.

Numbers: They will just keep on getting bigger

(and smaller)

Epic Games showcased Unreal Engine 5 features to much fanfare two years ago, and the first to hit the spotlight was a new geometry system, called Nanite. The system works in a separate rendering pass, and streams meshes in and out of a scene, depending on visibility.

The developers also leverage a software-based rasterizer, for triangles smaller than a pixel, to offload some of the triangle setup costs from the GPU. Like all brand new technologies, it's not perfect and there are several limitations, but the PlayStation 5 technology demo clearly showed what awaits us in the future.

A typical mesh in this demo packs over 1 million polygons, for a memory footprint of 27 MB (including all textures), and a render time of 4.5 milliseconds for culling, rasterizing, and material evaluation. At face value, those figures may not mean very much, but the infamous Crysis was fielding up to 2 million polygons for entire scenes.

Over the years, there have been many methods for increasing the number of triangles used to create objects and environments, such as tessellation and geometry shaders, all of which help to improve the natural detail of the models.

But Epic's Nanite system is a significant step forward, and we can expect other developers to create similar systems.

The incredibly high levels of detail seen in some games right now will become widespread in the future. Rich, fulsome scenes will not just be the preserve of expensive AAA titles.

Textures will continue to grow in size and quantity. Models in today's games now require a whole raft of texture maps (e.g. base color, normal, displacement, specular, gloss, albedo) to make the object look exactly as the artists intend it to.

Monitors are now at the stage where 1440p or 4K is far more affordable, and there are plenty of graphics cards that run pretty well at the former resolution. So with frames now composed of several million pixels, textures have to be equally high in resolution, to ensure they don't become blurry when stretched out over the model.

While we're not going to see 4K gaming becoming mainstream just yet, the future will be all about fine detail, hence there will continue to be growth in the memory footprint of textures.
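To put some rough numbers on that growth, the Python sketch below estimates the uncompressed size of a single square texture, with and without its mipmap chain. It assumes 4 bytes per texel (8-bit RGBA); real games use block compression, which cuts these figures considerably, so treat the output as an upper bound rather than what a shipping title would store. The function name `texture_bytes` is made up for this example.

```python
def texture_bytes(width, height, bytes_per_texel=4, mips=True):
    """Uncompressed size of one texture, optionally including its full mip chain."""
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_texel
        if not mips or (w == 1 and h == 1):
            break
        # each mip level halves both dimensions, down to 1 x 1
        w, h = max(w // 2, 1), max(h // 2, 1)
    return total

for size in (1024, 4096, 16384):
    mib = texture_bytes(size, size) / (1024 * 1024)
    print(f"{size} x {size}: {mib:.1f} MiB uncompressed, with mips")
```

Doubling the resolution along each axis quadruples the footprint, and the mip chain adds roughly a third on top. Multiply that by the handful of maps each material needs and it's easy to see where the memory goes.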

But millions of polygons, all wrapped in 16K textures, would be useless (or certainly not very realistic) if they weren't lit and shadowed correctly. Simulating how light interacts with objects in the real world, using computed 3D graphics, has been a subject of intense research and development for nearly 50 years.

Graphics cards are designed to calculate, en masse, the results of a raft of algorithms, each one critical in achieving the goal of "as real as possible." But for many years, GPUs didn't have anything dedicated to this task. They were, and still mostly are, nothing more than numerous floating-point ALUs, coupled with a lot of cache, with multiple collections of units for handling data.

That was until four years ago when Nvidia decided the time was ripe to head back to the 1970s, again, with a nod to the work of Turner Whitted, and provide hardware support to accelerate aspects of the holy grail of rendering.

Ray tracing will be everywhere

Traditional 3D graphics processing is essentially a collection of shortcuts, hacks, and tricks to give the impression that you're looking at a genuine image of a real object, lit by real light sources. Ray tracing isn't reality, of course, but it's far closer to it than what's generally termed "rasterization" – even though rasterization is still a key part of ray tracing, as are a multitude of other algorithms.
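At the heart of any ray tracer is a simple question asked millions of times per frame: does this ray hit that object, and if so, where? The Python sketch below answers it for a sphere, the classic textbook case. It is a bare-bones illustration (games trace against triangles organised in acceleration structures), and the function name and tuple-based vectors are assumptions for this example. The ray direction is assumed to be normalized.

```python
import math

def ray_sphere_hit(origin, direction, center, radius):
    """Distance along a normalized ray to the nearest hit on a sphere, or None."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    discriminant = b * b - c
    if discriminant < 0:
        return None                       # the ray misses the sphere entirely
    root = math.sqrt(discriminant)
    t = -b - root                         # nearer of the two intersections
    if t < 0:
        t = -b + root                     # ray starts inside the sphere
    return t if t >= 0 else None          # ignore hits behind the ray origin

camera = (0.0, 0.0, 0.0)
sphere_center, sphere_radius = (0.0, 0.0, 5.0), 1.0
print(ray_sphere_hit(camera, (0.0, 0.0, 1.0), sphere_center, sphere_radius))
print(ray_sphere_hit(camera, (0.0, 1.0, 0.0), sphere_center, sphere_radius))
```

Shadows, reflections, and global illumination all come from firing further rays from each hit point, which is why the cost climbs so quickly.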

We're now at the stage where every GPU vendor has hardware with ray tracing support, as well as the latest consoles from Microsoft and Sony. And even though the first games that provided a spot of ray tracing, for shadows or reflections, weren't anything to shout about, there are some titles out there that highlight its potential.

Despite the performance hit that applying ray tracing to games invariably produces, there's no doubt that it will eventually become as ubiquitous as texture mapping.

The signs for this are all around – by the end of this year, Nvidia will have three generations of GPUs with ray tracing acceleration, AMD will have two, and Intel will have one (although there are rumors that its integrated GPU will sport it, too).

The two most commonly used game development packages, Unreal Engine and Unity, both have ray tracing support built into their latest versions, utilizing the ray tracing pipeline in DirectX 12. Developers of other graphics systems, such as 4A Engine (Metro Exodus), REDengine (Cyberpunk 2077), and Dunia (Far Cry 6) have integrated ray tracing into older engines, with varying degrees of success.

One might argue that ray tracing isn't needed, as there are plenty of games with incredible visuals (e.g. Red Dead Redemption 2) that work fine without it.

But if one leaves the hardware demands aside for a moment, using ray tracing for all lighting and shadows is a simpler development task than having to use multiple different strategies to achieve similar imagery.

For example, the use of ray tracing negates the need to use SSAO (Screen Space Ambient Occlusion) – the latter is relatively light in terms of shaders, but very heavy on sampling and then blending from the z-buffer. Ray tracing involves a lot of data reading, too, but with a large enough local cache, there's a lower hit on the memory bandwidth.
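To get a feel for why SSAO leans so heavily on the z-buffer, here is a deliberately crude, depth-only sketch in Python. It is not Crytek's algorithm or any shipping implementation: the function name `ssao_factor`, the square sampling pattern, and the `bias` value are all assumptions made for illustration. The point is simply that every pixel needs several depth reads before any blending happens.

```python
def ssao_factor(depth, x, y, radius=1, bias=0.05):
    """Return 1.0 for a fully open pixel, lower values the more it is occluded."""
    height, width = len(depth), len(depth[0])
    center = depth[y][x]
    occluded = 0
    samples = 0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            if dx == 0 and dy == 0:
                continue
            sx, sy = x + dx, y + dy
            if 0 <= sx < width and 0 <= sy < height:
                samples += 1                          # one z-buffer read per sample
                if depth[sy][sx] < center - bias:     # neighbor is closer to the camera
                    occluded += 1
    return 1.0 - occluded / samples if samples else 1.0

flat = [[1.0] * 3 for _ in range(3)]
pit = [[1.0, 1.0, 1.0], [1.0, 2.0, 1.0], [1.0, 1.0, 1.0]]
print(ssao_factor(flat, 1, 1), ssao_factor(pit, 1, 1))
```

Even this toy version reads eight depth values per pixel with `radius=1`; at 4K, that is tens of millions of reads per frame before the result is blurred and blended.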

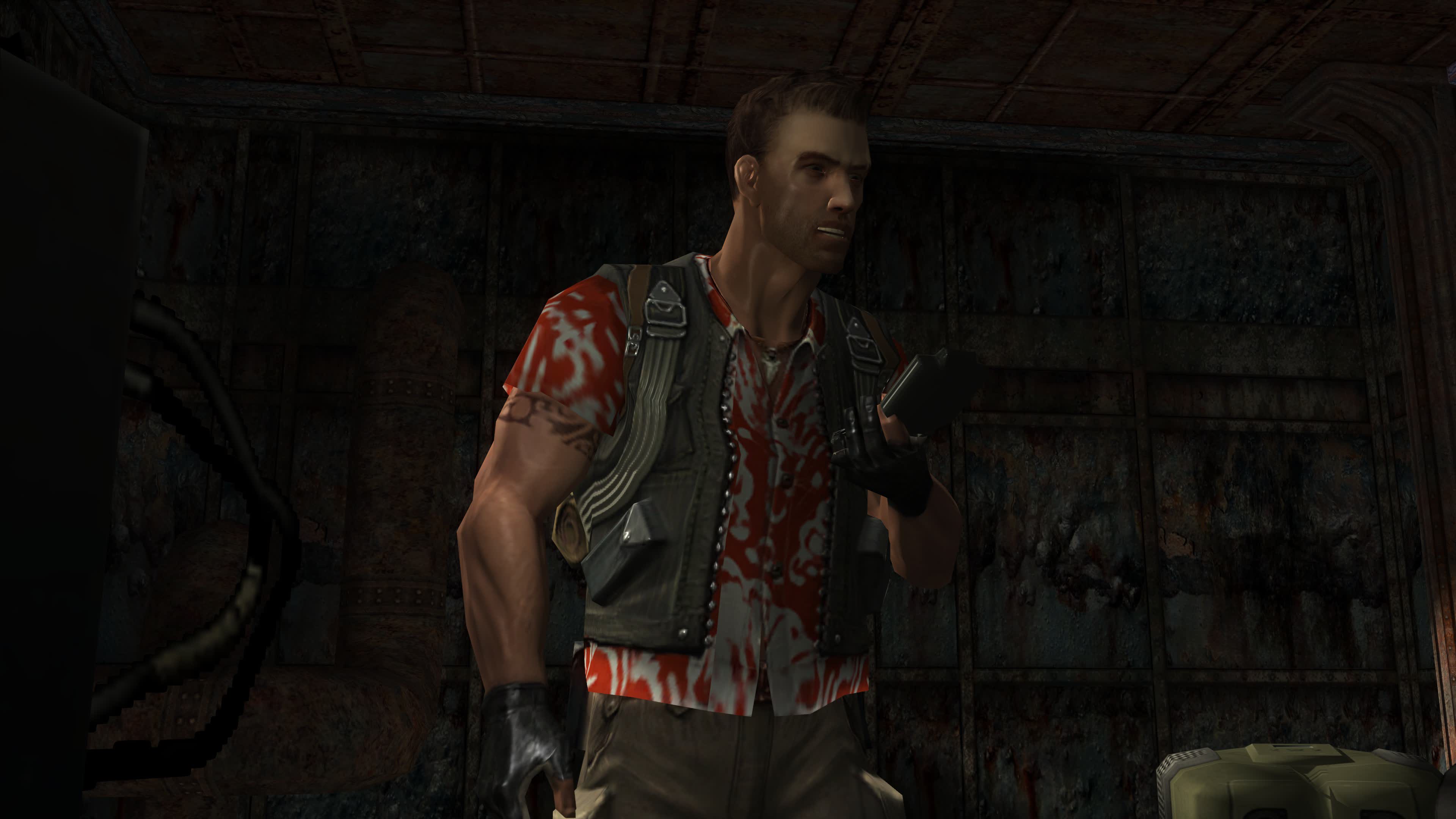

Ray tracing isn't just about making graphics look ever more realistic, though. It can add a greater sense of immersion to a game, when implemented properly. Take Remedy's 2019 title Control as an example of this: it looks and plays perfectly well without ray tracing, but with it fully enabled, the gameplay is transformed.

Moving the character through scenery, traveling through shadow and light, catching movement in reflections off glass panes – the tension and atmosphere are notably amplified when ray tracing is employed. Simple screenshots do it no justice.

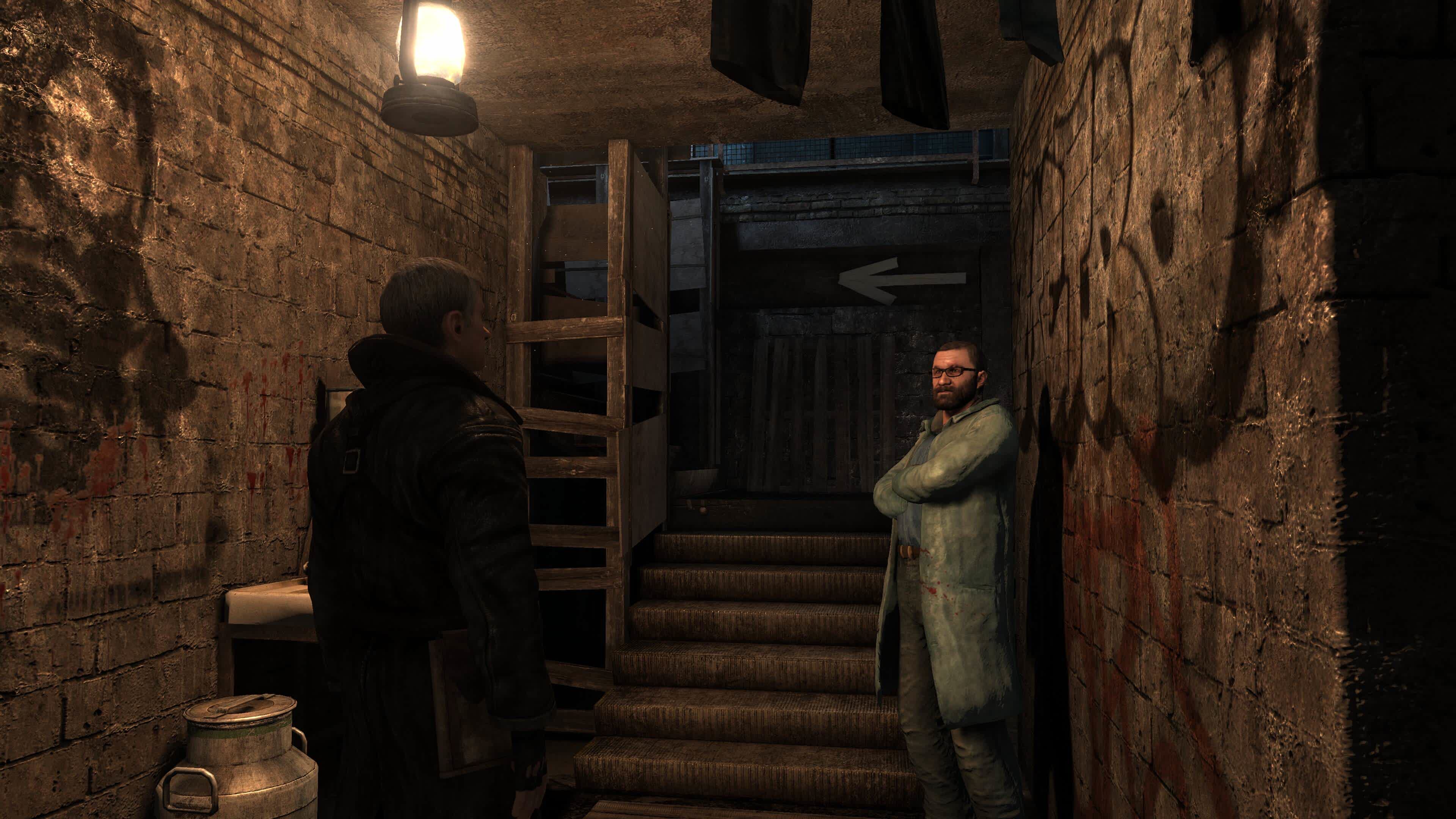

And there are plenty of game styles that will benefit from the improvement to global illumination and shadowing. Survival horror and stealth-based action-adventure titles are the obvious choices, but motorsport simulators will do, too, especially those that offer night races or endurance modes.

Even humbler games such as Minecraft take on a new light (pun not intended) when ray tracing is used to determine the illumination, transparency, reflections, and shadows of every surface in the game world.

Current implementations of ray tracing in games are relatively limited – it's typically just used for shadows and/or reflections, but the few that use it for everything show its clear potential.

So how much better will it get; what can we expect to see in games in 5 or 10 years' time? Let's begin by looking at a benchmarking test sample for Blender.

The ray and sample count were both significantly increased over the default settings, along with a few additional enhancements, so this took over 9 mins to render, using a GeForce RTX 2080 Super.

At first glance, you might be forgiven for thinking that this isn't anything special, but it's the subtlety in the shadowing, the light transmission through the glass, the reflections off the pictures on the wall, and the smooth transition of color grading across the floor that show what's possible with ray tracing.

It's clearly CGI, rather than coming across as "real," but it's a level of graphics fidelity that developers and researchers are pushing for.

But even the best technology demos from GPU vendors can't quite match this yet, at least not in any kind of playable frame rate. Below is a screenshot of Nvidia's Attic demo, which uses a modified version of Unreal Engine 4, with ray-traced direct and global illumination.

It doesn't look anywhere near as good as the Blender example, but the render time for each frame was 48 milliseconds – not 9 minutes!
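For a sense of scale, the quick Python calculation below compares the two render times quoted above. The 9 minutes is treated as a lower bound, since the Blender render took "over" that long, and the helper names are made up for this example.

```python
def frames_per_second(frame_time_ms):
    """Convert a per-frame render time in milliseconds to a frame rate."""
    return 1000.0 / frame_time_ms

def speedup(slow_ms, fast_ms):
    """How many times faster the quicker render is."""
    return slow_ms / fast_ms

blender_ms = 9 * 60 * 1000   # at least 9 minutes per frame
attic_ms = 48                # Nvidia's Attic demo, per frame

print(f"Attic demo: {frames_per_second(attic_ms):.1f} fps")
print(f"Speedup over the Blender render: at least {speedup(blender_ms, attic_ms):,.0f}x")
```

So the real-time demo is four orders of magnitude quicker, yet still sits at roughly 21 fps, well short of the 60 fps that many players consider the baseline.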

But even with all of the rendering trickery employed to speed things up, ray tracing is still pretty much the preserve of high-end graphics cards. PC gamers with mid-range or budget models, at the moment, have one of two options: enable ray tracing but drop down every other setting to make it run okay (negating the point of using it) or just leave the setting off entirely.

So you might think the idea of ray tracing being everywhere, for everyone, is decades away, just as it took many years for normal mapping or tessellation to make an appearance in all GPUs.

However, there's something else that's almost certainly going to be a standard feature in 3D gaming graphics and it will help to solve the aforementioned problem.

Upscaling will become the norm

When Nvidia released the Turing architecture in 2018, sporting new hardware to accelerate BVH searching and triangle-ray intersection calculations, they also promoted another new feature of the GPU: tensor cores. These units are essentially a collection of floating point ALUs that multiply and add values in a matrix.
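The operation in question is a fused matrix multiply-add, D = A × B + C. The plain Python sketch below shows what that computes on small matrices; it says nothing about how the hardware schedules the work or which number formats it uses, and the function name `matrix_fma` is an assumption for this example.

```python
def matrix_fma(a, b, c):
    """Compute D = A x B + C for matrices stored as lists of rows."""
    rows, inner, cols = len(a), len(b), len(b[0])
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) + c[i][j] for j in range(cols)]
        for i in range(rows)
    ]

a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
c = [[1, 1], [1, 1]]
print(matrix_fma(a, b, c))
```

Neural networks are, at their core, long chains of exactly this operation, which is why dedicated units for it pay off so handsomely in deep learning workloads.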

These actually made their first appearance a year earlier, in the GV100-powered Titan V. This graphics card was aimed exclusively at the workstation market, with its tensor cores being used in AI and machine learning scenarios.

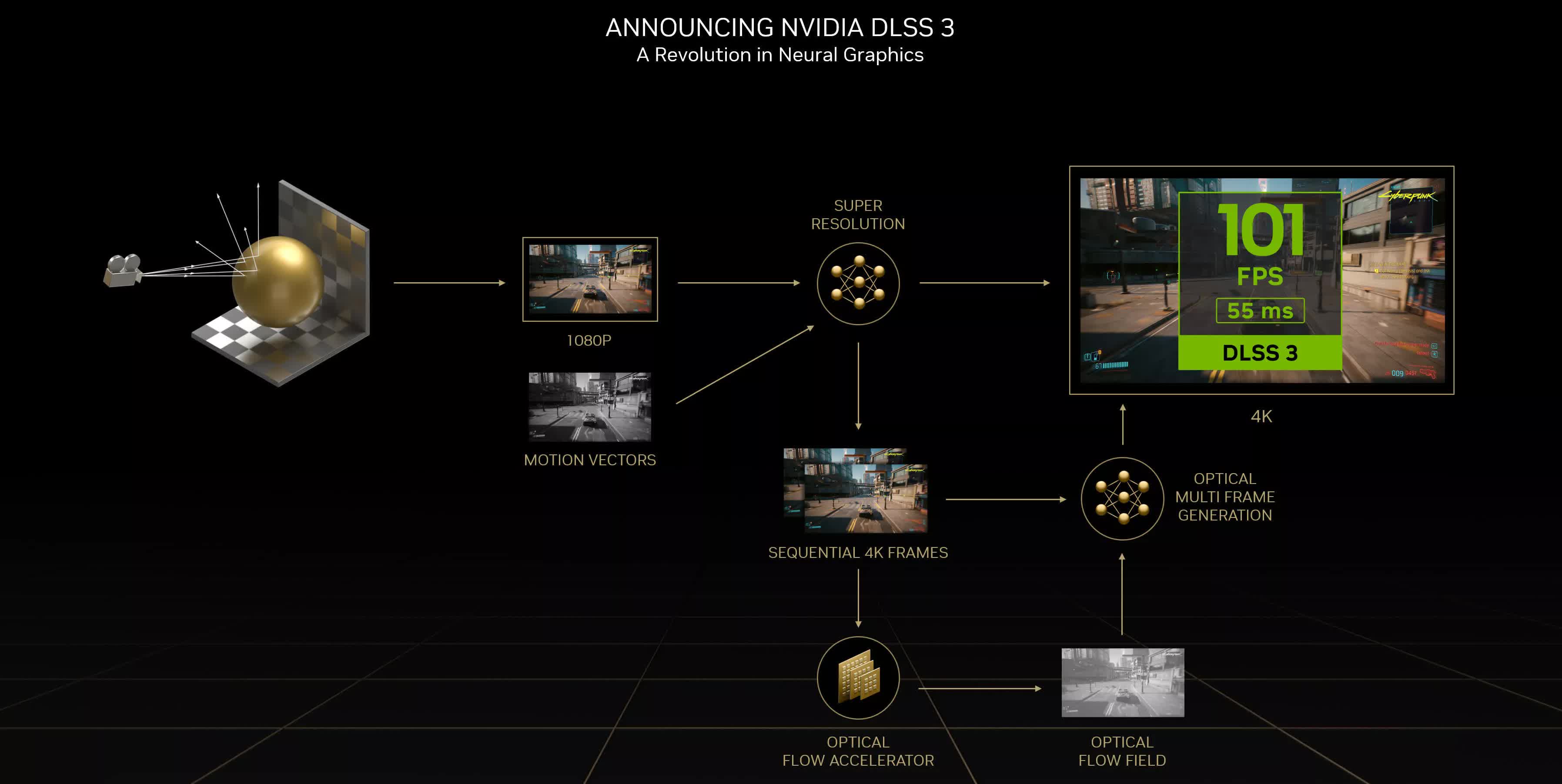

For Turing and the gaming lineup of its models, Nvidia utilized the power of deep learning to develop an upscaling system called DLSS. Although the initial version wasn't particularly good, the current implementation generates great results, and both AMD and Intel have created their own versions (FSR and XeSS).

The premise behind this kind of upscaling is that the GPU renders the frame at a much lower resolution than the one displayed on the monitor, which obviously improves performance. But instead of only increasing the number of pixels, along each axis, to make the final image, a complex algorithm is run instead.
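The Python sketch below shows both halves of that premise: how many fewer pixels the GPU has to shade at a lower internal resolution, and what the naive "just add more pixels" approach looks like. Nearest-neighbor scaling is used here as the simplest possible baseline; it is not what DLSS, FSR, or XeSS do, and the function names are made up for this example.

```python
def pixel_count(width, height):
    """Total number of pixels in a frame."""
    return width * height

def nearest_neighbor_upscale(image, out_width, out_height):
    """Enlarge an image (a list of rows) by repeating the nearest source pixel."""
    in_height, in_width = len(image), len(image[0])
    return [
        [image[y * in_height // out_height][x * in_width // out_width]
         for x in range(out_width)]
        for y in range(out_height)
    ]

ratio = pixel_count(3840, 2160) / pixel_count(2560, 1440)
print(f"4K has {ratio:.2f}x the pixels of 1440p")

small = [[1, 2], [3, 4]]
for row in nearest_neighbor_upscale(small, 4, 4):
    print(row)
```

Rendering at 1440p and presenting at 4K means shading well under half the pixels, but simple pixel repetition produces blocky edges. The cleverer algorithms exist to win back the detail that this baseline throws away.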

The exact nature of the process is formulated in the world of deep learning, by training the mathematical model using hundreds of thousands of images of what a full-scale, pristine rendered frame should look like.

And it's no longer just about upscaling and modifying an already rendered frame. With the release of the Lovelace architecture (RTX 40 series), Nvidia has shown that DLSS on its third iteration will be able to generate entire frames.

Frame interpolation has a somewhat poor reputation because of its extensive use in TVs. How this new system will work in practice remains to be seen, but it demonstrates the direction in which the technology is heading.

Tensor cores, or even deep learning, aren't necessarily required for this kind of upscaling, though. Strictly speaking, AMD's FSR (FidelityFX Super Resolution) upscaling system isn't deep learning based. It's based around Lanczos resampling, with the current version applying temporal anti-aliasing afterwards, but the end results are essentially the same: more performance, for a small decrease in visual quality.
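For the curious, here is what textbook Lanczos resampling looks like in one dimension, written in Python. This is the general filter, not AMD's shader code (which works in 2D on the GPU with its own approximations and edge handling); the function names, the `a=2` window size, and the edge clamping are assumptions for this sketch.

```python
import math

def lanczos_kernel(x, a=2):
    """Lanczos window: sinc(x) * sinc(x / a) for |x| < a, otherwise zero."""
    if x == 0:
        return 1.0
    if abs(x) >= a:
        return 0.0
    px = math.pi * x
    return a * math.sin(px) * math.sin(px / a) / (px * px)

def lanczos_upscale_1d(samples, factor, a=2):
    """Upscale a row of samples by an integer factor using Lanczos weights."""
    n = len(samples)
    out = []
    for i in range(n * factor):
        src = (i + 0.5) / factor - 0.5        # output position in source coordinates
        base = math.floor(src)
        total = 0.0
        weight_sum = 0.0
        for k in range(base - a + 1, base + a + 1):
            w = lanczos_kernel(src - k, a)
            idx = min(max(k, 0), n - 1)       # clamp to the edge of the row
            total += w * samples[idx]
            weight_sum += w
        out.append(total / weight_sum)        # normalise so flat areas stay flat
    return out

row = [0.0, 0.0, 1.0, 1.0]
print([round(v, 3) for v in lanczos_upscale_1d(row, 2)])
```

Compared with simple pixel repetition, each output value is a weighted blend of several neighbors, which keeps edges sharper than plain linear blending would. The negative lobes of the kernel are what give Lanczos its characteristic crispness, and also its occasional ringing.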

And because FSR doesn't require specific hardware units, like tensor cores, its implementation will likely become more widespread than DLSS – especially given the latest gaming consoles all use AMD's CPUs and GPUs.

None of the systems presently in use are perfect, though. The algorithms struggle with fine details, such as narrow wires or grids, resulting in shimmering artifacts around the object. And there's also the fact that all of the upscaling systems require GPU processing time, so the more basic the graphics card, the smaller the boost in performance.

Be that as it may, all new rendering technologies have problems when they first appear – DirectX 11 tessellation, for example, when enabled in the first games to support it, would often have glitches or serious performance issues. Now it gets used almost everywhere with nary a nod or a wink.

So while each GPU vendor has its way of upscaling, the very fact that they all support it (and FSR is supported across a much wider range of graphics cards than DLSS) shows that it's not going to be some fad that disappears. SLI, anyone?

But then, machine and deep learning will be used for more than just upscaling.

AI to power realistic content

The sense of realism in a game world certainly goes beyond graphics. It's about everything within the game behaving in a manner that the player expects, given their understanding of the game's lore and rules. AI can help out here, too. In virtually every single-player game, the responses of computer-controlled characters are handled by a long sequence of 'If...then...else' statements.

Making that seem realistic is a serious challenge – it's all too easy to either have incredibly dumb enemies that pose no threat whatsoever, or ones that have god-like abilities to detect you from a single visible pixel.
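A stripped-down version of that kind of logic might look like the Python sketch below. Every threshold, parameter, and action name here is hypothetical and chosen purely to illustrate the pattern; real games layer hundreds of such rules into state machines or behavior trees.

```python
def enemy_action(distance, visible_fraction, health):
    """Pick an action for a computer-controlled enemy from hand-written rules."""
    if health < 20:
        return "retreat"              # badly hurt, so back off
    elif visible_fraction < 0.25:
        return "patrol"               # player mostly hidden, so carry on as normal
    elif distance > 30:
        return "advance"              # player spotted but out of range
    else:
        return "attack"

print(enemy_action(distance=50, visible_fraction=0.8, health=100))
print(enemy_action(distance=10, visible_fraction=0.1, health=100))
```

The balancing problem is plain to see: set the visibility threshold too high and the enemy is oblivious, set it close to zero and you get the guard who spots you from a single visible pixel.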

The developers at now defunct Google Stadia utilized machine learning to train how a game, under development at the time, should play against you. The input data was in the form of the game playing against itself, the results of which were used to create a better computer player.

Back in 2017, OpenAI, a research and deployment company specializing in machine learning, did a similar thing, making a computer play against itself in thousands of rounds of Dota 2, before pitching it against some of the best (human) players around the world.

This may sound like it would just make all games far too difficult – after all, computers can process data millions of times faster than we can. But different levels of challenge can be generated from the number of neural operations performed during the training.

So developers could create, say, 10 levels of "bot AI" based on 10 different training sessions performed: the shorter the training, the dumber the AI.

Beyond the scope of AI improving how games play and feel, there's game development itself to consider. The process of creating all of the assets for a game (models, textures, etc.) is very time-consuming, and as worlds become more complex and detailed, the time required to complete this work grows with them.

These days, the development of a AAA title requires hundreds of people to dedicate long hours over many years. This is why game developers are looking for ways to reduce this workload and GPU companies are at the forefront of such research.

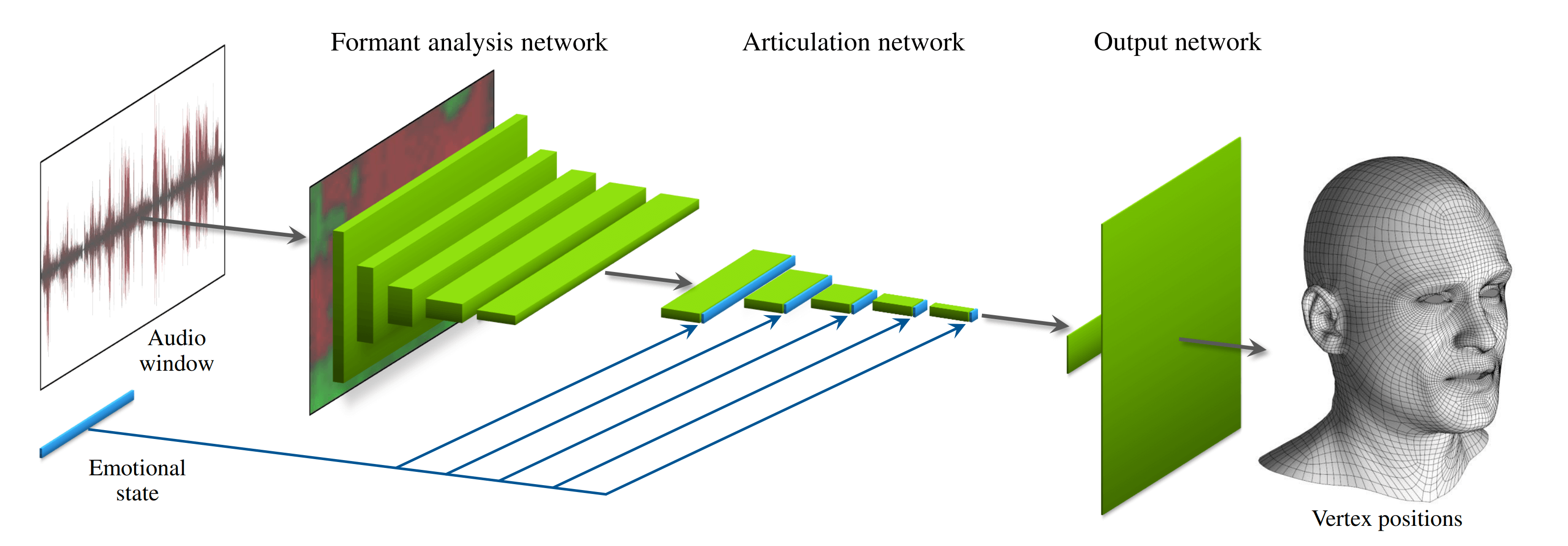

For example, modeling facial animation to match speech can be very time-consuming and expensive.

Companies with money to burn will use motion capture, having the voice actors read out the lines, whereas smaller development teams will have to use specialized software or hand map the animation themselves.

Nvidia and Remedy Entertainment have developed a method, using machine learning, that can produce the required motion of the polygons based entirely on the speech being played. Not only does this speed up one part of the content creation process, but it also offers a solution to companies looking to expand their player base.

Speech localization sometimes doesn't quite work, simply because the facial animations were generated for just one language – switch to another, and the sense of immersion is lost. With the above technique, that problem is solved.

Research on neural radiance fields also shows promise for game developers. The likes of Nvidia's Instant NeRF software can generate a 3D scene, based on nothing more than a few photos and details on how they were taken.

NeRFs can also be used to help generate realistic-looking fur, wool, and other soft materials, quickly and simply. All of this can be done on a single GPU, although the training time required prevents it from being currently usable during gaming.

However, self-learning for smarter enemies or facial animation based on speech isn't something that can be done in real-time, nor on a single GPU. The processing power required to grind through the neural network is currently on a supercomputer level and takes months of training.

But 10 years ago, having ray tracing in a game was unthinkable.

Changes in future GPUs / 3D hardware

We started this look at the future of 3D graphics with a point about hardware. Fifty years ago, researchers were extremely limited in how they could test their theories and models because the technology at the time simply wasn't capable enough.

Such work was also the preserve of university professors, and it stayed very much in the world of academia.

While this still happens, graphics development is now firmly in the hands of game developers and GPU designers. Take SSAO, for example – this was created by one of Crytek's programmers, looking for a way to improve shadows in Crysis.

Today, AMD, Intel, and Nvidia work hand-in-hand with universities and game companies around the world, finding new ways of improving the performance and quality of everything graphics-related. Rather than taking decades for a new algorithm to be used in games, it's now just a few years, and in some cases, a handful of months.

But what about the hardware to support it? Will that continue to improve, at the rate it has over the past 30 years, or are we nearing a decline in growth?

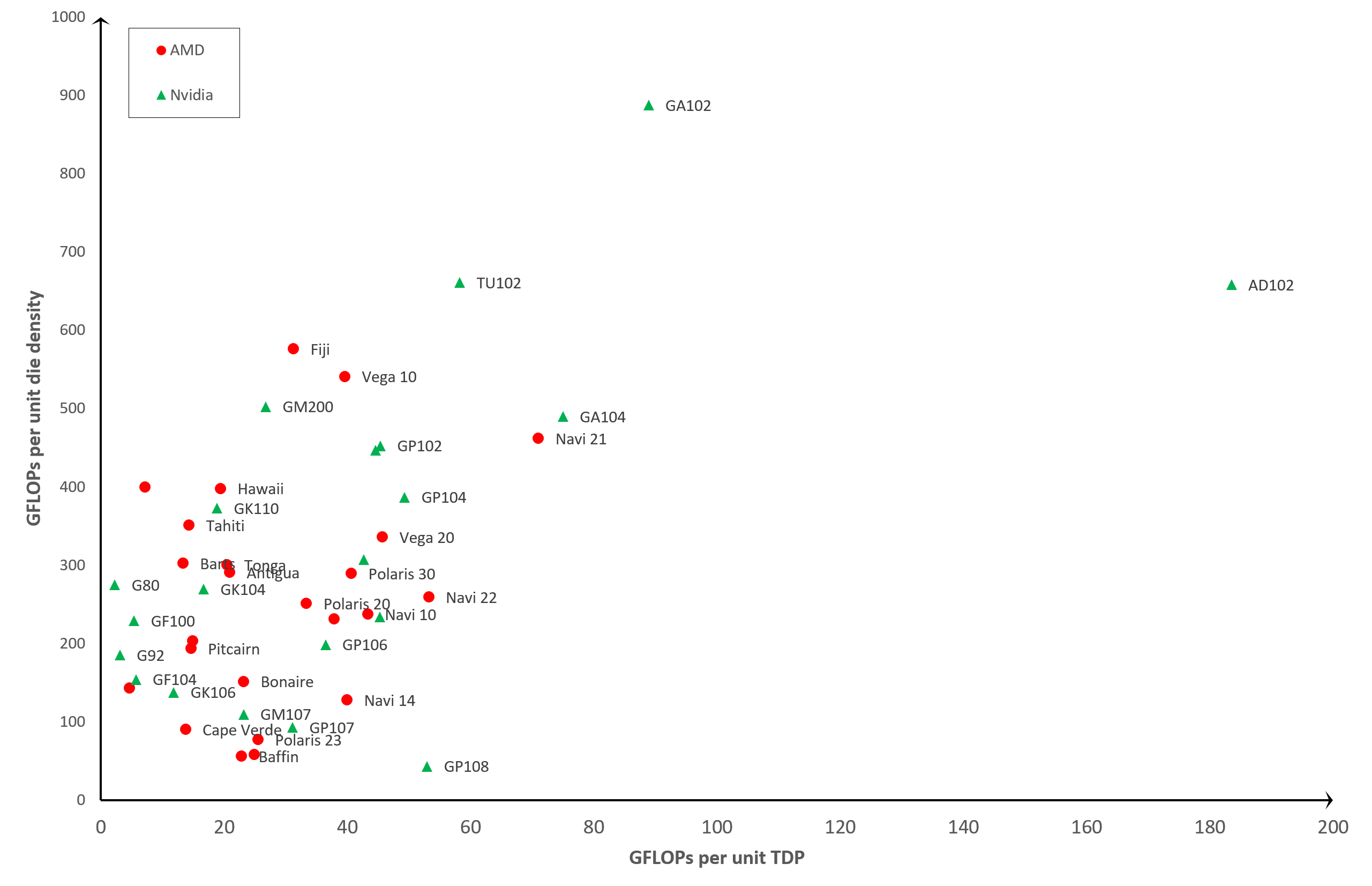

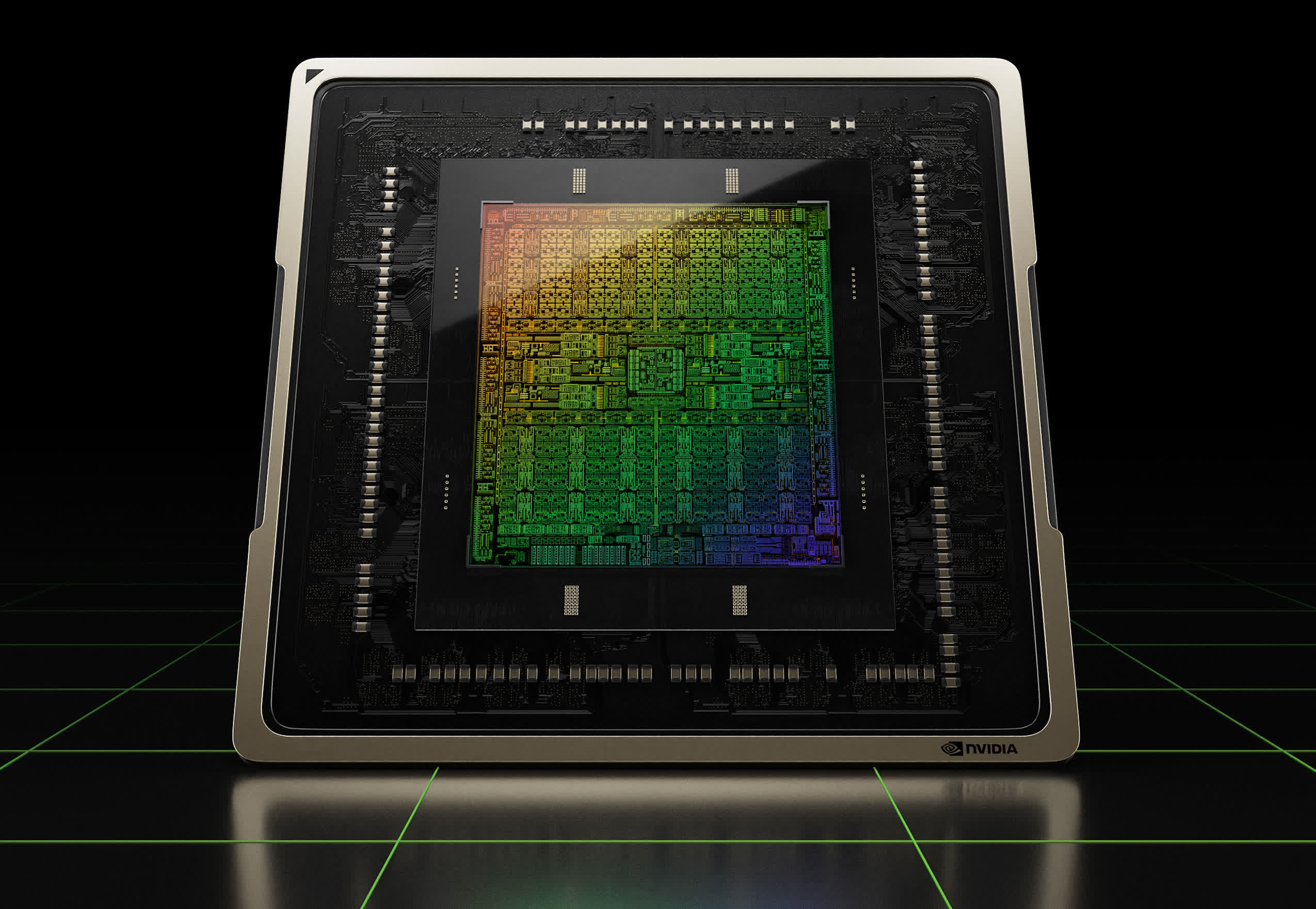

At the moment, there's no sign of a plateau. Nvidia's latest GPU, the AD102, is a huge leap over its predecessor (Ampere's GA102), in terms of transistor count, FP32 throughput, and overall feature set.

The chips powering the Xbox Series X and PlayStation 5 have AMD GPUs equivalent to those found in today's mid-range graphics cards and game development for consoles is often the starting point for research into new performance improvement techniques. Even chip designers for phone deployment are focusing on ramping up the capabilities of their tiny GPUs.

But that doesn't mean the next few generations of GPUs are going to be more of the same, albeit with more shaders and cache. Ten years ago, the top GPUs comprised ~3.5 billion transistors and now we're looking at 76 billion nano-sized switches, with 25 times more FP32 calculating power.
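Those two transistor counts imply a steady compound growth rate, which the Python snippet below works out. It uses only the figures quoted above (roughly 3.5 billion and 76 billion, ten years apart) and assumes smooth year-on-year growth, which real product cycles don't follow; the function names are made up for this example.

```python
import math

def annual_growth(start, end, years):
    """Compound annual growth rate between two values."""
    return (end / start) ** (1.0 / years) - 1.0

def doubling_time(start, end, years):
    """Years needed to double at the same compound rate."""
    return years * math.log(2) / math.log(end / start)

start, end, years = 3.5e9, 76e9, 10
print(f"Overall increase: {end / start:.1f}x")
print(f"Annual growth: {annual_growth(start, end, years) * 100:.0f}%")
print(f"Doubling time: {doubling_time(start, end, years):.2f} years")
```

That works out to a doubling a little more often than every two and a half years, so on this measure at least, the top-end GPUs have kept roughly to the pace that Moore's law is usually quoted at.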

Is that huge increase in transistor count just for more processing? The simple answer is no. Modern GPUs are dedicating increasingly more die space to units specialized in ray tracing and AI applications. These are the two areas that will dominate how 3D graphics continue to evolve: one to make everything more real, the other to make it actually playable.

The future is going to be stunning. Really stunning.