So you're in the market for a new monitor or laptop and you check out the specs for the screen. It has the resolution and refresh rate you're after, but you also notice some models boasting support for HDR.

If you're wondering exactly what HDR is, how it works, and what benefits it can offer, then you've come to the right place. In this article, we give you the low-down on those three magic letters.

A quick explanation about HDR

HDR stands for High Dynamic Range and is used to label technologies and methods employed in image and video content, display panels, and graphics rendering that increase the difference between the maximum and minimum values of light and color.

This difference, a.k.a. the dynamic range, is how many times higher the maximum value is compared to the minimum. For example, if it involves the light output of a monitor, the dynamic range refers to the contrast ratio (max brightness divided by the min brightness).

These technologies improve the perceived quality of images because the human visual system is capable, under the right circumstances, of discerning light through a vast contrast ratio.

High dynamic range systems help to retain fine details that would otherwise be lost in dark or bright areas, as well as improve the depth and range of colors seen. Static images, movies, and rendered graphics all look far better when presented on a high-quality HDR display.

HDR technology is often more expensive than so-called SDR (Standard Dynamic Range) technology because it uses more complex or harder-to-manufacture parts and has to process and transfer a greater amount of data. Now, time to explain this all in more detail...

Understanding digital color

Computers and our favorite tech gadgets create images on screens by manipulating the values of color channels. Each channel represents one specific color (red, green, or blue), and every other color is made through a combination of these three, using what is known as the RGB color model.

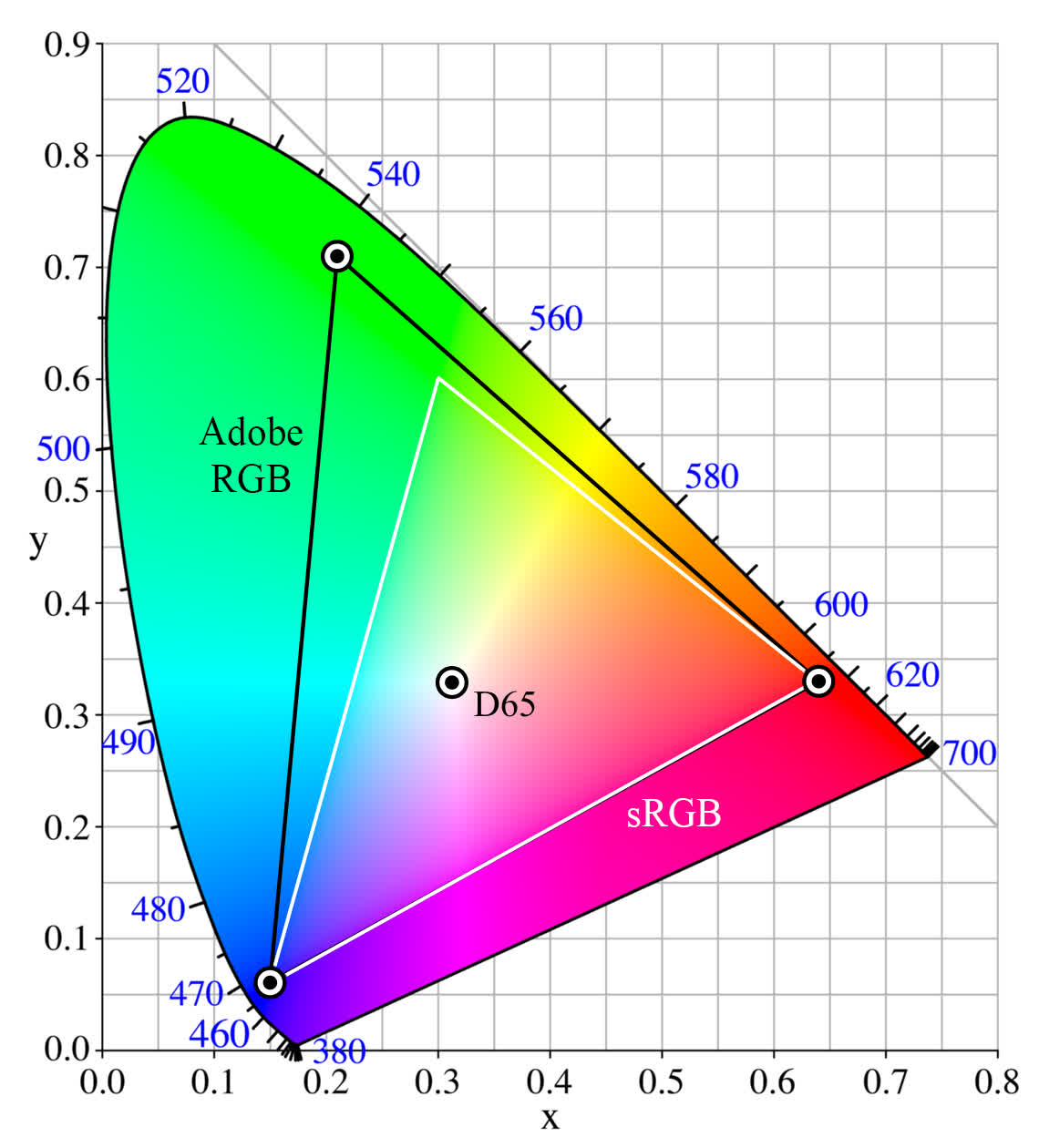

This is a mathematical system that simply adds these three color values together, but by itself, it's not much use. The model needs further information about how the colors are to be interpreted, to account for aspects such as the way the human visual system works, and the result is known as a color space. There's a whole host of different combinations, but some of the most well-known color spaces are DCI-P3, Adobe RGB, and sRGB.
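
As a rough illustration of the additive part of the RGB model, the sketch below mixes 8-bit color triples by summing each channel. The function name `add_rgb` and the clamping to 255 are assumptions made for this example; a real color space adds further rules on top of this.

```python
def add_rgb(*colors):
    """Additively mix 8-bit (R, G, B) triples, clamping each channel at 255."""
    return tuple(min(255, sum(color[i] for color in colors)) for i in range(3))


RED = (255, 0, 0)
GREEN = (0, 255, 0)
BLUE = (0, 0, 255)

print("red + green        =", add_rgb(RED, GREEN))        # yellow
print("red + green + blue =", add_rgb(RED, GREEN, BLUE))  # white
```

On its own, this says nothing about how bright or saturated "full red" actually looks on a screen, which is the gap a color space fills.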

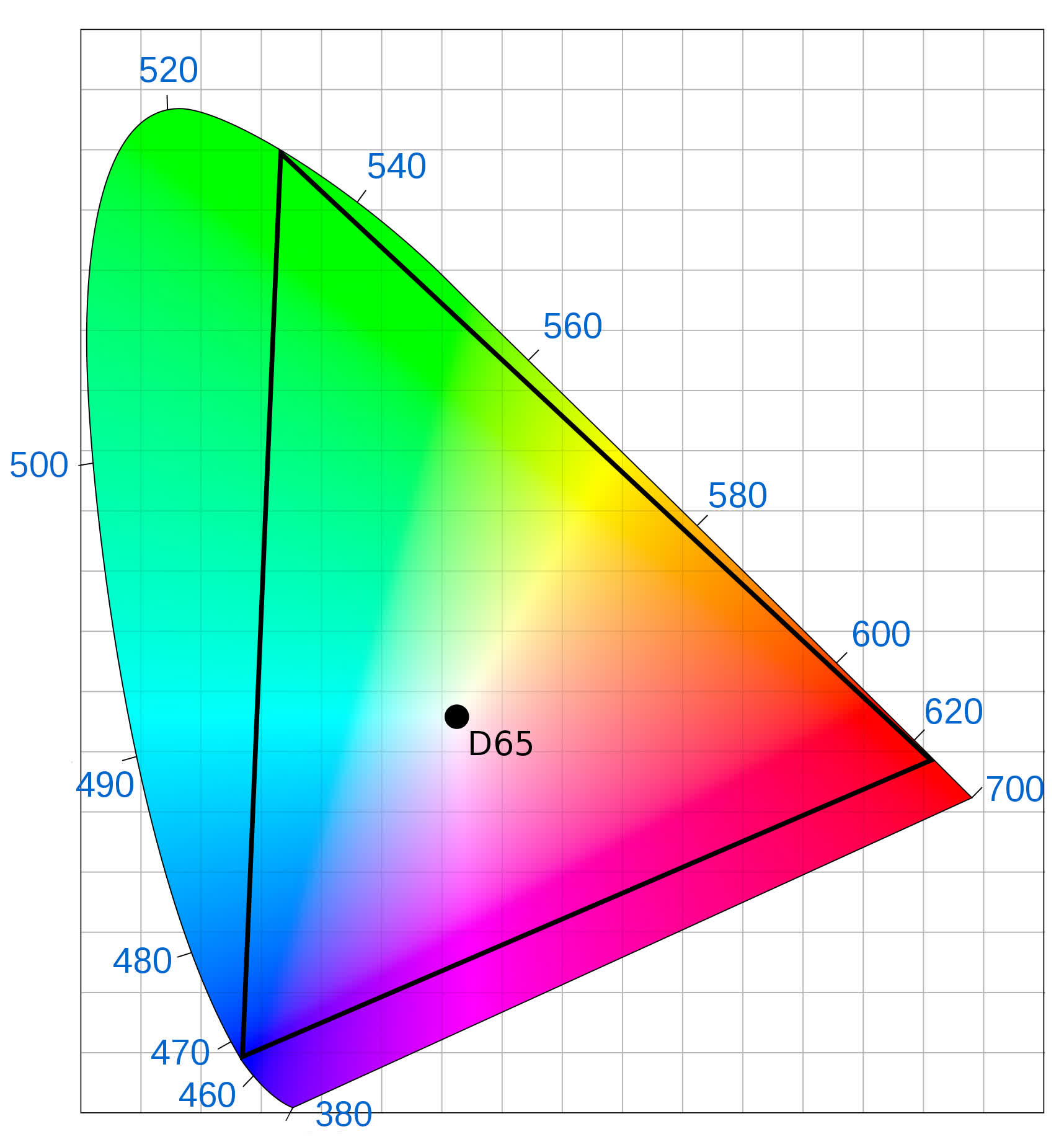

Such spaces are unable to cover every possible color that's discernible by us, and the set of colors that a space can represent is called its gamut. Gamuts are often displayed in what's known as a CIE xy chromaticity diagram:

In our monitor reviews, you'll always see references to these gamuts, with measurements of how much of the gamut gets covered by the display. But since color models, spaces, and gamuts are essentially all just a bunch of math, various systems are needed to convert the numbers into a correct physical representation of the image.

Going through all of those would require another article, but one of the most important ones is called the electro-optical transfer function (EOTF). This is a mathematical process that translates the electrical signals of a digital image or video into displayed colors and brightness levels.
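
To give a feel for what a transfer function does, here is a minimal sketch of a pure power-law ("gamma") EOTF. The exponent of 2.2 and the 250-nit peak are assumptions chosen for illustration, and `gamma_eotf` is a made-up name; real standards such as sRGB define slightly more involved curves.

```python
def gamma_eotf(signal, peak_nits=250.0, gamma=2.2):
    """Convert a normalised signal in [0, 1] to luminance in nits.

    Uses a simple power law; this approximates, but is not identical to,
    the curves defined by actual display standards.
    """
    if not 0.0 <= signal <= 1.0:
        raise ValueError("signal must be in the range [0, 1]")
    return peak_nits * signal ** gamma


# Show how a handful of 8-bit code values map to light output.
for code in (0, 64, 128, 192, 255):
    print(f"code {code:3d} -> {gamma_eotf(code / 255):7.2f} nits")
```

Note that the mapping is not linear: a mid-range code value produces far less than half of the peak luminance.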

Most of us never need to worry about any of this: we just plug our monitors and TVs in, watch movies, and play games. However, professional content creators will allocate plenty of time to calibrating their devices, to ensure that images and the like are displayed as correctly as possible.

The importance of color depth

The color channels that are processed in the models can be represented in a variety of ways, such as an arithmetic domain of [0,1] or a percentage value. However, since the devices handling all the math use binary numbers, color values are stored in this format, too.

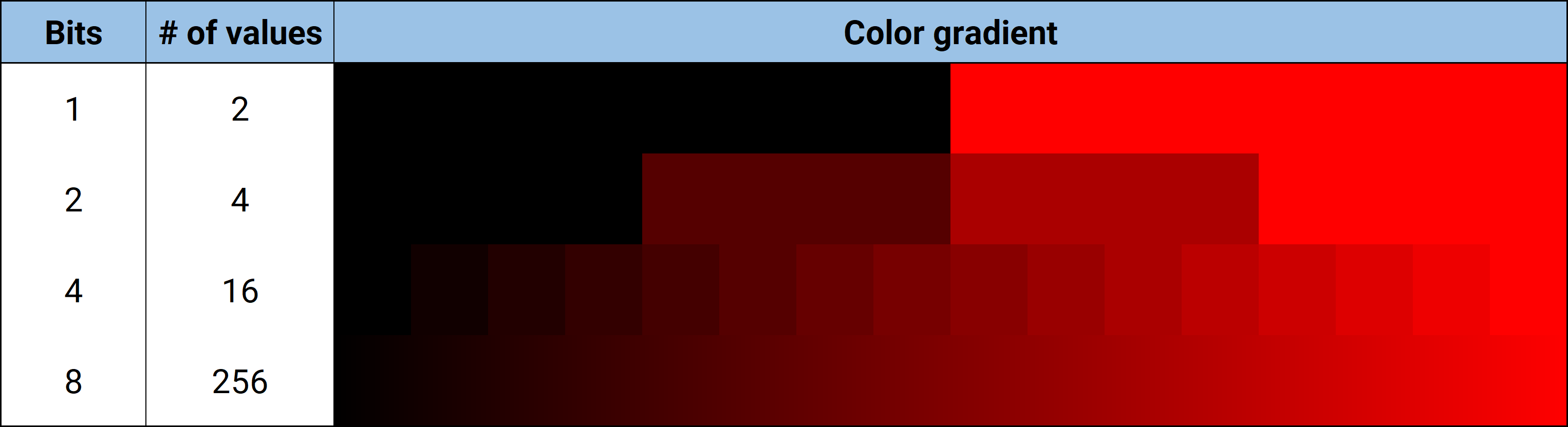

The size of digital data is measured in bits and the amount used is often referred to as the color depth. The more bits that are used, the greater the number of different colors that can be created. The minimum standard these days is to use 8 bits for each channel, and you may sometimes see this written as R8G8B8 or just 888. A single bit provides two values (0 and 1), two bits results in 2 x 2 = 4 values, and so 8 bits give 2 x 2 x ... (8 times) = 256 values.

Multiply these together, 256 x 256 x 256, and you get 16,777,216 possible combinations of RGB. That might seem like an impossibly large number of colors, far more than you would ever need, and for the most part, it is! That's why this is the industry norm.
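
The arithmetic above is easy to reproduce for any color depth. The short sketch below (helper names are illustrative only) computes the number of values per channel and the total number of RGB combinations.

```python
def levels_per_channel(bits):
    """Number of distinct values a single channel can hold."""
    return 2 ** bits


def total_colors(bits):
    """Number of RGB combinations when all three channels use `bits` bits."""
    return levels_per_channel(bits) ** 3


for bits in (8, 10, 12):
    print(f"{bits:2d} bits: {levels_per_channel(bits):5,d} levels per channel, "
          f"{total_colors(bits):,d} colors")
```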

For the moment, let's focus on a single color channel. Above, you can see the difference in how the red channel changes, depending on the number of bits used. Notice how much smoother 8 bits is compared to the others? It might seem to look perfectly okay like this and the need for using more bits just doesn't exist.

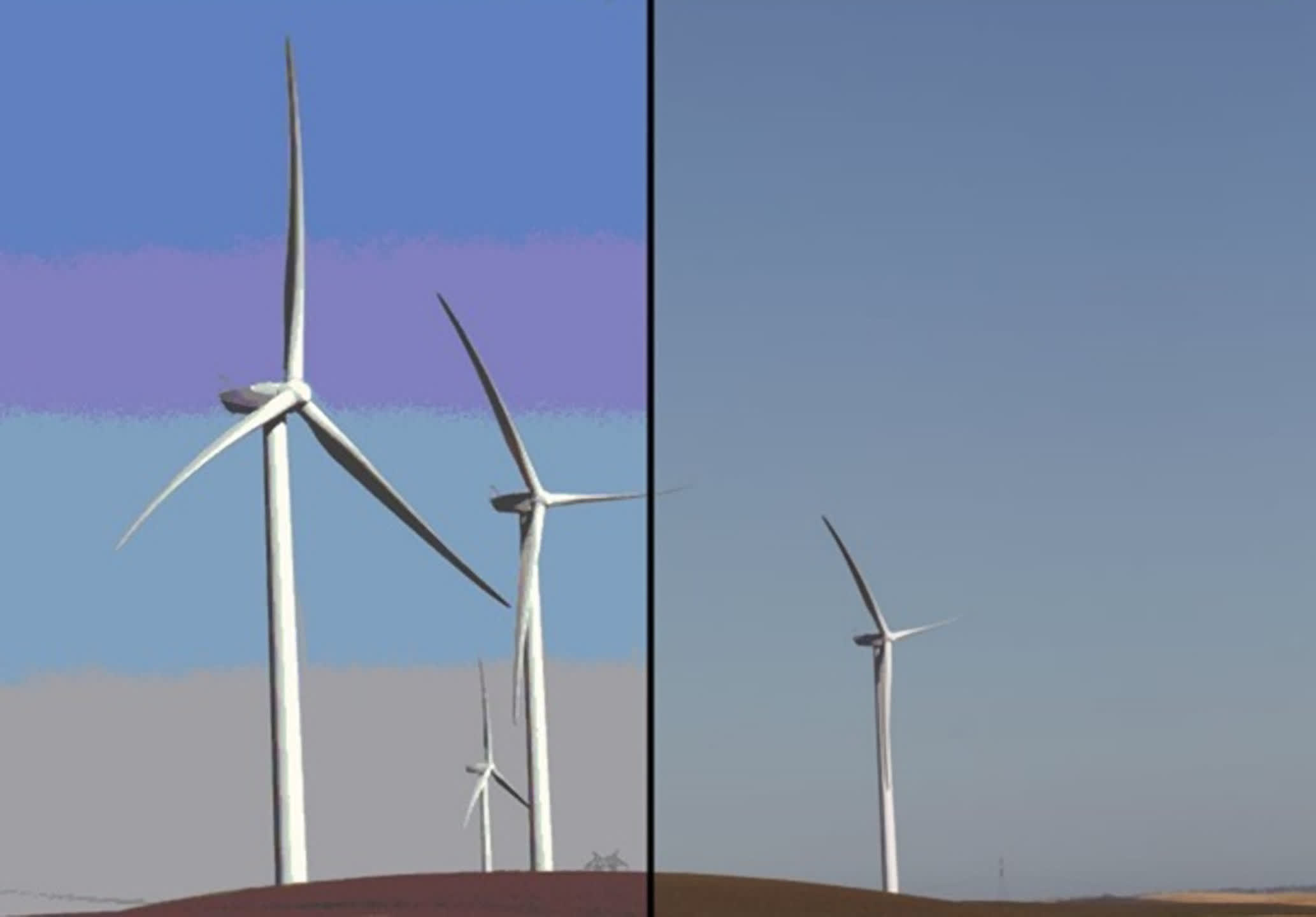

However, once you start blending colors together, 8 bits isn't quite enough. Depending on the image being viewed, it's easy to spot marked regions where the color seems to jump from one value to another. In the image below, the colors on the left side have been simulated using 2 bits per channel, whereas the right side is standard 8 bits.

While the top of the right side doesn't look too bad, a close examination of the bottom (especially near the ground) amply demonstrates the issue. Using a higher color depth value would eradicate this problem, although you don't need to go really big – 10 or 12 bits is more than enough, as even at 10 bits, there would be 1024 steps in the gradient of a color channel. That's four times more color changes than at 8 bits.
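
Banding comes from rounding a smooth gradient to the nearest available level. The sketch below quantizes a smooth 0-to-1 ramp at different bit depths and counts how many distinct steps survive; the function names and the 4096-sample ramp are assumptions for this example.

```python
def quantize(value, bits):
    """Round a normalised value in [0, 1] to the nearest integer code."""
    return round(value * (2 ** bits - 1))


def count_steps(bits, samples=4096):
    """Count the distinct codes produced by a smooth ramp of `samples` points."""
    return len({quantize(i / (samples - 1), bits) for i in range(samples)})


for bits in (2, 8, 10):
    print(f"{bits:2d} bits -> {count_steps(bits)} distinct steps in the gradient")
```

The fewer steps there are, the larger the jump between neighboring values, and the easier the bands are to see.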

Using a larger color depth becomes even more important when using a color space with a very wide gamut. sRGB was developed by Hewlett Packard and Microsoft over 20 years ago, but is still perfectly suitable for the displays of today because they nearly all use 8 bits for color depth. However, something like Kodak's ProPhoto RGB color space has a gamut so large that 16-bit color channels are required to avoid banding.

How displays make images

The majority of today's computer monitors, TVs, and screens in tablets and phones all use one of two technologies to generate an image: liquid crystals that block light (a.k.a. LCD) or tiny diodes that emit light (LEDs). In some cases, it's a combination of the two, using LEDs to create the light that the LCD then blocks out.

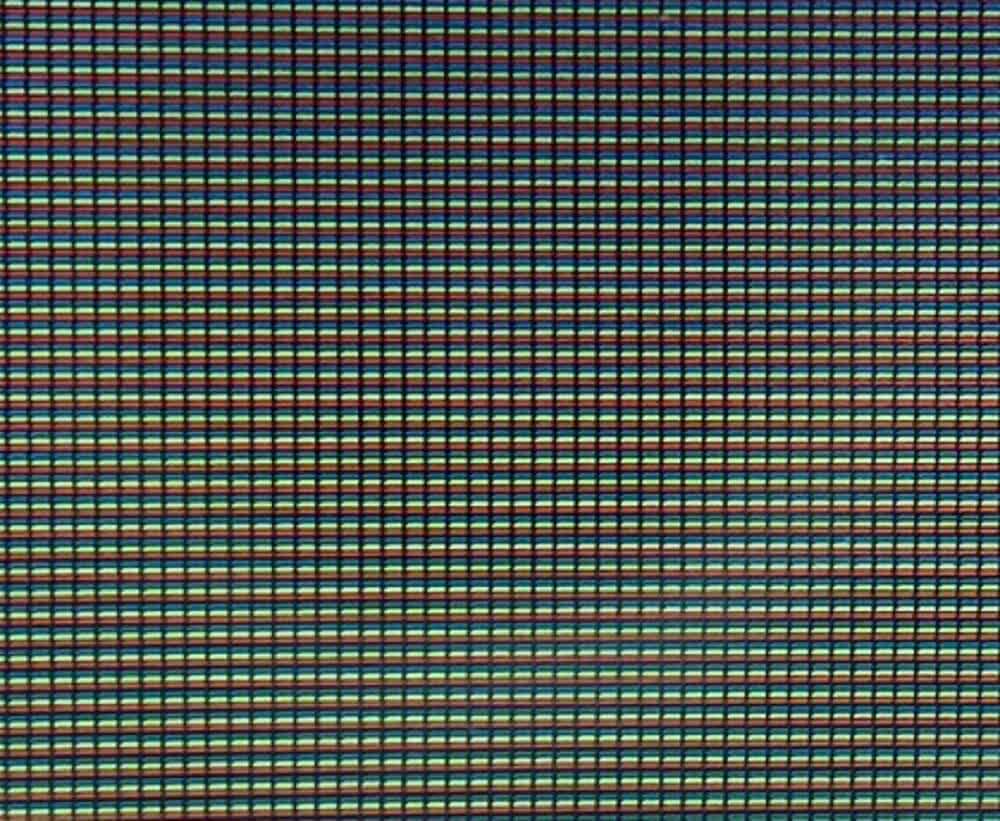

If you take a look at some of the best monitors you can buy at the moment, the majority of them have LCD panels inside. Break one of these apart and take a close look at the screen, and you might see something like this.

Here you can clearly make out the individual RGB color channels that comprise each pixel (picture element). In the case of this example, each one is actually a tiny filter, only allowing that color of light to pass through. Screens that use LEDs (such as the OLED panels in top-end monitors or expensive phones) don't need to use them, as they make the light color directly.

No matter what technology is used, there is a limit to how much light can be passed through or emitted. This quantity is called luminance and is measured in nits (one nit is one candela per square meter). A typical monitor might have a peak luminance of, say, 250 nits – this would be achieved by having all of the pixels set to white (i.e. maximum values for each RGB channel).

However, the minimum value is somewhat harder to pin down. LCD screens work by using liquid crystals to block light from coming through but some light will always manage to sneak its way past the crystals. It might only be a tiny amount of luminance, perhaps 0.2 nits or so, but recall that the dynamic range is the ratio between the maximum and minimum values for something.

If the max is 250 and the min is 0.2, that's a dynamic range of 250/0.2 = 1250. As it is very hard to lower the minimum luminance in LCD screens, manufacturers improve the dynamic range by increasing the maximum luminance.

Screens that emit light, rather than transmit it through crystals, fare much better in this aspect. When the LEDs are off, the minimum luminance is so low that you can't really measure it. This actually means that displays with OLED (organic LED) panels, for example, theoretically have infinite contrast ratios!
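
The contrast ratio calculation can be sketched in a few lines. The 250 and 0.2 nit figures come from the example above; the 1,000-nit panel is a hypothetical value added to show the effect of raising peak luminance, and treating a zero black level as an infinite ratio is a simplification.

```python
import math


def contrast_ratio(max_nits, min_nits):
    """Dynamic range of a display: peak luminance divided by black level."""
    if min_nits <= 0:
        # A pixel that emits no light at all gives a theoretically infinite ratio.
        return math.inf
    return max_nits / min_nits


print("LCD, 250 nits peak: ", contrast_ratio(250, 0.2))
print("LCD, 1000 nits peak:", contrast_ratio(1000, 0.2))  # hypothetical brighter panel
print("Emissive panel:     ", contrast_ratio(250, 0.0))
```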

HDR formats and certification

Let's take a mid-priced computer monitor – the Asus TUF Gaming VG279QM. This uses an LCD panel that's lit from behind using rows of LEDs, and the manufacturer claims it to be HDR capable, citing two aspects: HDR10 and DisplayHDR 400.

The first one is a specification for a video format, created by the Consumer Technology Association, that sets out several technical aspects for the color space, color depth, transfer function, and other elements.

Where sRGB uses a relatively simple gamma curve for the transfer function, the HDR10 format uses one known as the Perceptual Quantizer (PQ) and is far more suitable for content with a high dynamic range. Likewise, the color space (ITU-R Recommendation BT.2020, shown below) for this format also has a wider gamut than sRGB and Adobe RGB.
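
For the curious, the PQ curve can be written out directly. The sketch below follows the EOTF formula and constants as published in SMPTE ST 2084 (also used in ITU-R BT.2100); treat it as an illustration and check the constants against the standard before relying on it. The name `pq_eotf` is chosen for this example.

```python
# PQ constants, expressed as the exact fractions used in the standard.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_eotf(signal):
    """Convert a normalised PQ signal in [0, 1] to absolute luminance in nits."""
    if not 0.0 <= signal <= 1.0:
        raise ValueError("signal must be in the range [0, 1]")
    p = signal ** (1 / M2)
    return 10000.0 * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


for signal in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"signal {signal:4.2f} -> {pq_eotf(signal):10.3f} nits")
```

Unlike a relative gamma curve, PQ maps signal values to absolute luminance, with the top of the range reaching 10,000 nits, and it spends a large share of its code values on darker tones.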

Additionally, the specification also requires the color depth to be a minimum of 10 bits to avoid banding. The format also contains fixed metadata (further information about the whole video the display is showing, to allow the display to adjust video signals) and support for chroma sub-sampling 4:2:0 when using compression.
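
To see how color depth and chroma sub-sampling affect the amount of data involved, the following sketch estimates the uncompressed size of a single frame. The 3840 x 2160 resolution is an assumption for the example, and the helper names are illustrative; real video streams are compressed far below these figures.

```python
# Average number of samples stored per pixel for common sub-sampling schemes.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def bits_per_pixel(bit_depth, subsampling="4:4:4"):
    """Average bits per pixel for a given depth and sub-sampling scheme."""
    return bit_depth * SAMPLES_PER_PIXEL[subsampling]


def frame_bytes(width, height, bit_depth, subsampling="4:4:4"):
    """Uncompressed size of one frame in bytes."""
    return width * height * bits_per_pixel(bit_depth, subsampling) / 8


for depth, scheme in ((8, "4:4:4"), (10, "4:4:4"), (10, "4:2:0")):
    size_mb = frame_bytes(3840, 2160, depth, scheme) / 1e6
    print(f"{depth:2d}-bit {scheme}: {size_mb:6.2f} MB per frame")
```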

There are multiple other HDR video formats (e.g. HDR10+, HLG10, PQ10, Dolby Vision) and they differ in terms of licensing cost, transfer function, metadata, and compatibility. The majority, though, use the same color space and depth.

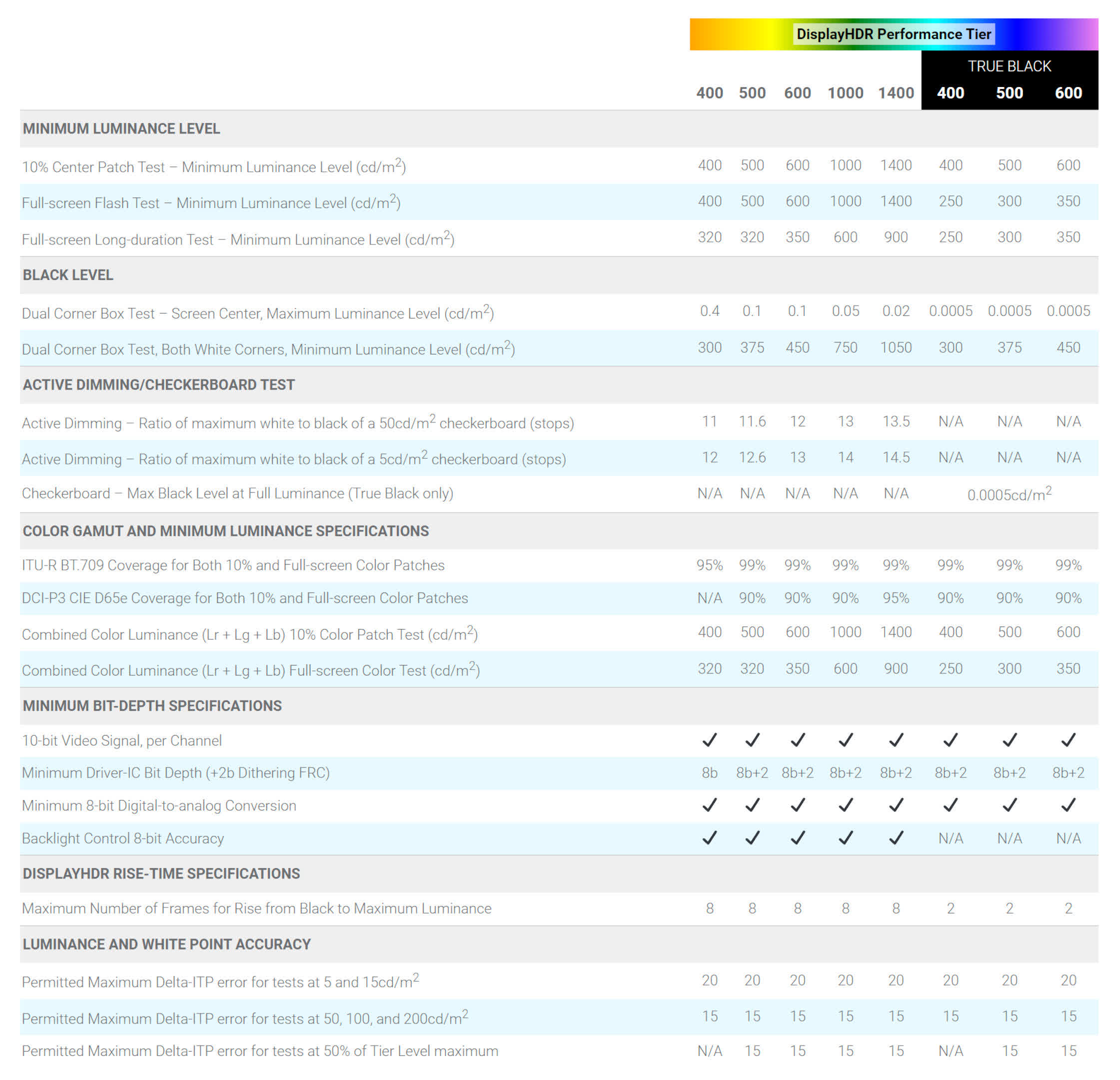

The other HDR label (DisplayHDR 400) on our example monitor is another certification, this time by VESA (Video Electronics Standards Association). Where HDR10 and the others are all about content, this one covers the hardware displaying it.

As this table demonstrates, VESA requires manufacturers to ensure their monitors meet certain requirements concerning luminance, color depth, and color space coverage before they can apply for the certification.

A quick glance shows us that a DisplayHDR rating of 400 is the lowest one you can get and, in general, any monitor with this rating isn't especially good at HDR, though if you've never experienced anything better, you may still find it perfectly okay.

The world of HDR formats and certification is rather messy. It's perfectly possible to have a fantastic display that can show content using various HDR formats but isn't certified by either VESA or the UHD Alliance (another standards body). Conversely, one can have a monitor that carries multiple certifications yet isn't especially good when showing high dynamic range material.

As things currently stand in the world of technology, there's only one display type that we would recommend for an excellent HDR experience, and that's OLED. The use of quantum dots in LED panels greatly improves their luminance and color space coverage, but nothing (yet) hits the dynamic range of an OLED panel. Ideally, you want one with an average luminance of 1000 nits, though higher is better (and probably more expensive).

Movies and HDR

If you want to watch the latest films with high dynamic range, then you're going to need three things – an HDR TV or monitor, a playback device that supports HDR formats, and the film in a medium that's been HDR encoded. Actually, if you're planning on streaming HDR content, then you're going to need one extra thing, and that's a decent internet connection.

We've already covered the first one, so let's talk about devices that play the movies, whether it's a Blu-ray player or a streaming service dongle. In the case of the latter, almost all of the latest devices from Amazon, Apple, Google, and Roku support various formats – only the cheapest models tend not to have it.

For example, the $40 Roku Streaming Stick 4K (above) handles HDR10, HDR10+, HLG, and Dolby Vision. Given that most streaming services use either HDR10 or Dolby Vision, you'd be more than covered with that range of support.

If you prefer to watch films on physical media, then you'll need to check the specifications of your Blu-ray device. Most recent 4K players will support it, but older ones probably won't.

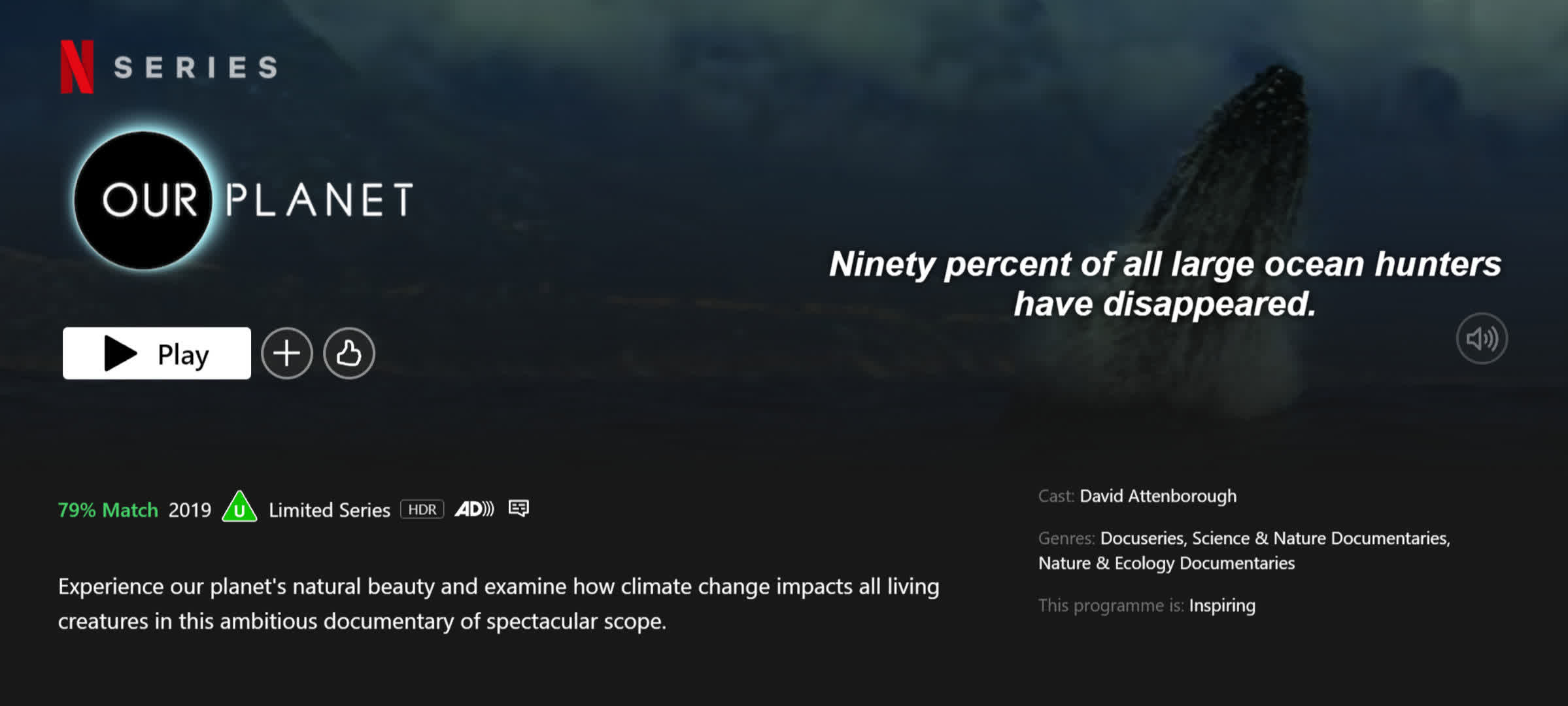

Returning to streaming, the likes of Disney Plus, Netflix, and Prime Video do offer content that's been encoded in one of the HDR formats, but more often than not, you'll need to hunt through the various menus to find them; you'll probably also need to be paying extra for it, too.

Note that if you want to watch a streamed HDR movie on your PC, then be prepared for a lot of hassle. Your graphics chip and display combination will need to support HDCP 2.2 and in Windows, you will need to have HEVC codecs installed. Then, there's the annoyance that Netflix only supports 4K HDR streaming in Microsoft Edge and their Windows app; Disney Plus and Prime Video will work in most browsers, though.

It can be such a struggle to get it to work properly that it's often a lot easier to just stream using a dongle plugged into the HDMI port of your HDR-capable monitor.

HDR in video games

In the early days of 3D rendering in video games, graphics chips did all of the calculations using 8-bit integer values for each color channel, storing the completed image in a block of memory (called the frame buffer) with the same color depth.

Today's GPUs do most of that math using 32-bit floating point numbers, with the frame buffer usually being the same. The reason for this change was to improve the quality of the rendering – as we saw earlier in this article, using a color depth that's too low results in prominent banding. The use of high-precision numbers also enables game developers to render their game with a high dynamic range.

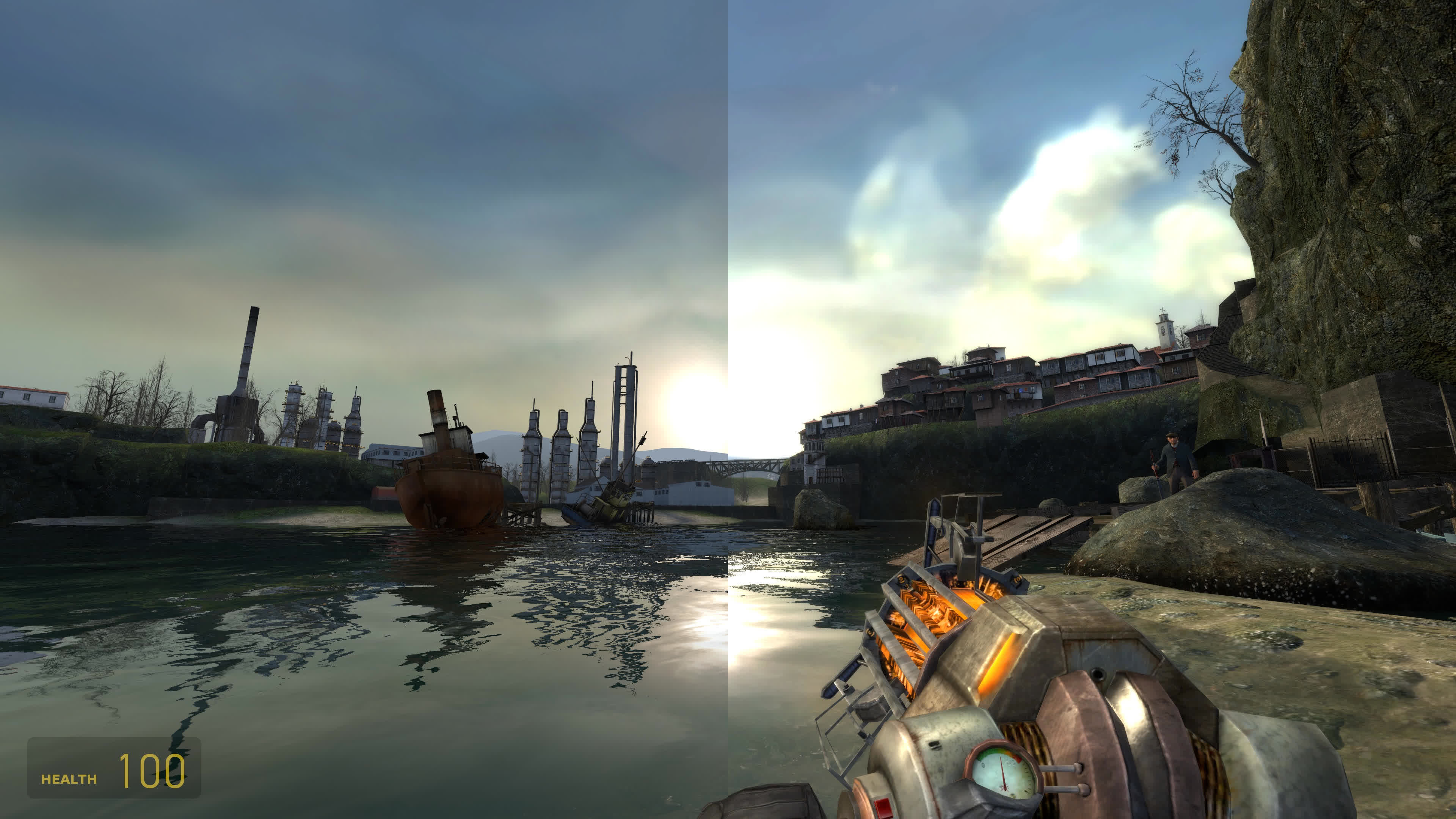

The ability to do this has been around for 20 years and one of the first games to make use of HDR rendering was the Lost Coast expansion to Half-Life 2. Below we can see how a typical frame looks with standard rendering (left) compared to HDR (right).

As a first attempt at this new technology, Lost Coast appears over-exaggerated, with bloom and exposure levels being somewhat excessive. However, the expansion was primarily designed as a demonstration of new features in the rendering engine.

Pick any big 3D game now and they'll all be using HDR rendering, even if you don't have a monitor capable of supporting it. In such cases, the completed frame needs additional processing (tone mapping) to convert its format into one suitable for an SDR display.
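
As a minimal sketch of what tone mapping involves, the example below uses the simple Reinhard operator, one of many published approaches and not necessarily what any particular game uses. The function names and the 2.2 display gamma are assumptions for illustration.

```python
def reinhard(luminance):
    """Compress an unbounded HDR value (>= 0) into the range [0, 1)."""
    return luminance / (1.0 + luminance)


def to_8bit(hdr_value, gamma=2.2):
    """Tone map a linear HDR value, gamma encode it, and round to an 8-bit code."""
    mapped = reinhard(max(hdr_value, 0.0))
    return round(255 * mapped ** (1 / gamma))


# Scene values well above 1.0 still end up inside the 0-255 range.
for value in (0.0, 0.5, 1.0, 4.0, 16.0, 1000.0):
    print(f"HDR value {value:7.1f} -> 8-bit code {to_8bit(value)}")
```

Very bright values are squeezed together near the top of the range rather than simply being clipped, which is how some highlight detail survives on an SDR display.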

Relatively few games offer direct support for HDR monitors – in some cases, it will be nothing more than an on/off toggle, whereas others may provide additional settings to adjust the frame processing to best suit the display's capabilities.

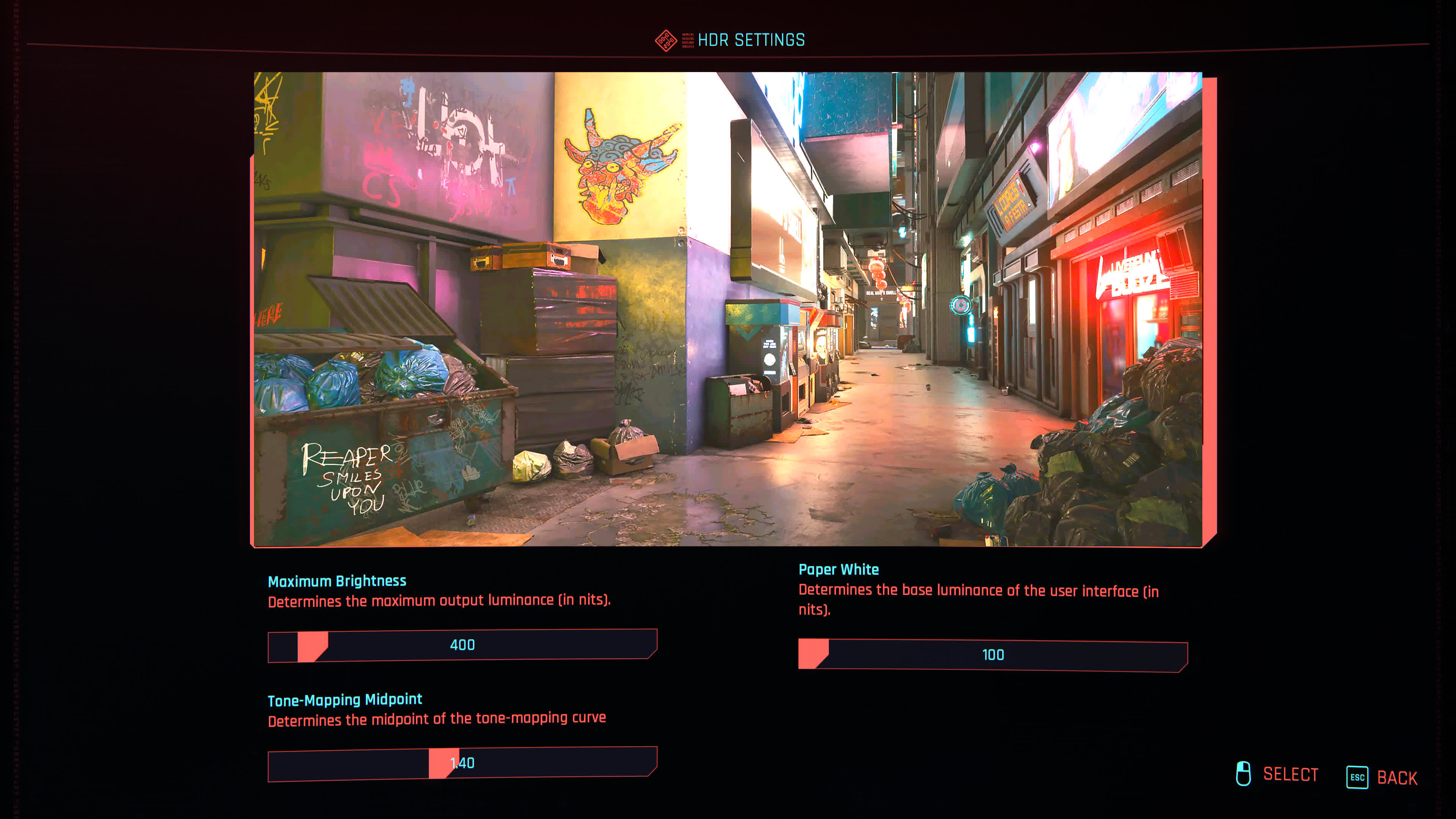

For example, Cyberpunk 2077 can format the frame buffer so that it complies with the HDR10 requirements but then allows you to adjust the mapping process on the basis of your monitor's luminance levels.

Such HDR offerings are rather sparse, far more so than the range of games supporting ray tracing. Given the improvement in visual fidelity HDR can offer, it's a shame more developers aren't choosing to do this.

One way to get around this in Windows 11 is to use the Auto HDR feature. In games using Microsoft's DirectX 11 or 12 API, enabling this option forces your PC to run an algorithm on an SDR frame buffer, mapping it to an HDR one.

The option can be found in one of two ways: Start Menu > Settings > System > Display > HDR or by pressing the Windows key + G to bring up the Xbox Game Bar. The results of Auto HDR are varied, with some games showing virtually no improvement, whereas others are transformed entirely.

It comes down to how many high-precision shaders and render targets are being used before any tone mapping is applied. A simple RTS title is unlikely to display any noticeable change, whereas an open-world game, with lots of realistic graphics, is likely to show improvement.

HDR isn't the norm...yet

With the best HDR experience still the preserve of expensive OLED displays, HDR is still a feature for the few, not the many. Microsoft and other companies have tried to promote its use, but until good HDR hardware reaches a price range that millions of users can afford, it won't be something that most tech enthusiasts greatly desire.

While you can get an "HDR" monitor for less than $400, you're likely to be rather underwhelmed by the whole experience – these models just don't have enough of a dynamic range to make details really stand out. Prices are coming down, though, and just as 4K and high refresh rates used to be expensive, there will come a point in the near future when a good HDR monitor will fall into a range that covers every budget.

But at least now you know exactly what HDR is!