We have had a lot of AI news lately, with product launches from OpenAI, Google, and Microsoft. Now we are just waiting for Apple and its upcoming WWDC. Just last week, we wrote about how much of the industry was counting on these announcements to show what AI can do.

This also got us thinking about how to frame a rational discussion of neural-network-based machine learning, a.k.a. AI. We now loosely see the sector developing in three categories:

- AI as a Feature

- AI as a Product or Platform

- AI as Magic

By "AI as Magic," we mean Artificial General Intelligence or conscious computers. Some people think this is imminent, but we are not convinced. It may be possible, but we do not think it is coming anytime soon.

Editor's Note:

Guest author Jonathan Goldberg is the founder of D2D Advisory, a multi-functional consulting firm. Jonathan has developed growth strategies and alliances for companies in the mobile, networking, gaming, and software industries.

At the other end of the spectrum, "AI as a Feature" is what we often refer to as "under the hood" improvements. This is a large collection of small gains that AI brings to software we were already running. For instance, several SaaS companies have reported 5%-10% reductions in the cost of service delivery after introducing some form of AI into their systems. Facebook has reported similar small gains in its ad-matching algorithms. These are not glamorous, but in aggregate they provide tremendous value. If AI delivers nothing else, this alone is a significant boost.

That leaves the middle category, where AI becomes a product in its own right. This could evolve further into complete platforms providing a wide array of services and value. This is the realm the big companies are all fighting over, as their demos make clear. At this point, our sense is that they are not quite there yet.

Admittedly, this is a purely subjective assessment based on watching their launch videos, with no hands-on experience. But our metric is very clear: what would we be willing to pay for? The answer is not much. Everything launched so far is emotionally compelling and looks incredible, but what utility does it deliver? From what we can tell, everything either serves a niche we do not belong to (better drawing tools, for instance) or is not yet reliable enough to trust, such as AI agents.

But these are just the consumer applications. Many other companies are looking to use AI tools to advance their efforts, and some of these may be very promising. Robotics, for one, seems poised to make major advances with the latest crop of tools. We are not talking about C-3PO humanoids, but about new ranges of motion for machines.

This sector is currently hampered by a lack of sufficient training data, but there have been significant advances in synthetic data creation over the last year. Synthetic data is problematic for consumer-facing applications (it risks producing models built on hallucinations), but it could be very useful in other fields. Another area that looks very promising is AI for drug discovery. We know that all the major (and minor) biotech companies are investing heavily in Nvidia gear. And there are many other examples. We think this area is going to see a lot of experimentation. That will mean many failures and dead ends, but it could also produce striking advances.

And we think that is the key point in all of this. We are still in the very early days of AI systems. Clear advances are coming at a frenetic pace. Ultimately, we are optimistic that they will deliver on their promise, but it is likely to be a messy process.

Nvidia has another strong quarter with demand still far outstripping supply

Meanwhile, the leader in AI hardware has reported strong earnings. This happens so regularly that it is starting to get boring. But then CEO Jensen Huang got on the call, and the stock rose further on his commentary.

The numbers: the company reported fiscal 1Q25 revenue of $26 billion, up 18 percent from the previous quarter and an astonishing 262 percent from the same period last year, ahead of the consensus estimate of $24.5 billion. These are big numbers by any metric. Oh, they also announced a 10-for-1 stock split, catnip for retail investors.
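To make those percentages concrete: "up 262 percent" means revenue is 3.62 times the year-ago figure, not 2.62 times. The minimal Python sketch below backs out the implied prior-period revenue from the $26 billion figure and the two growth rates. The helper name `implied_prior` is our own, and because $26 billion is itself rounded, the outputs are approximations derived from the text, not reported figures.

```python
def implied_prior(current: float, growth_pct: float) -> float:
    """Back out the prior-period value from the current value and percent growth.

    Growth of g percent means: current = prior * (1 + g / 100).
    """
    return current / (1 + growth_pct / 100)


revenue_1q25 = 26.0  # $ billions, rounded figure from the text

prior_quarter = implied_prior(revenue_1q25, 18)  # quarter-over-quarter growth
year_ago = implied_prior(revenue_1q25, 262)      # year-over-year growth

print(f"Implied prior quarter: ${prior_quarter:.1f}B")  # about $22.0B
print(f"Implied year-ago quarter: ${year_ago:.1f}B")    # about $7.2B
```

In other words, Nvidia added roughly $4 billion of quarterly revenue sequentially and nearly $19 billion versus a year earlier.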

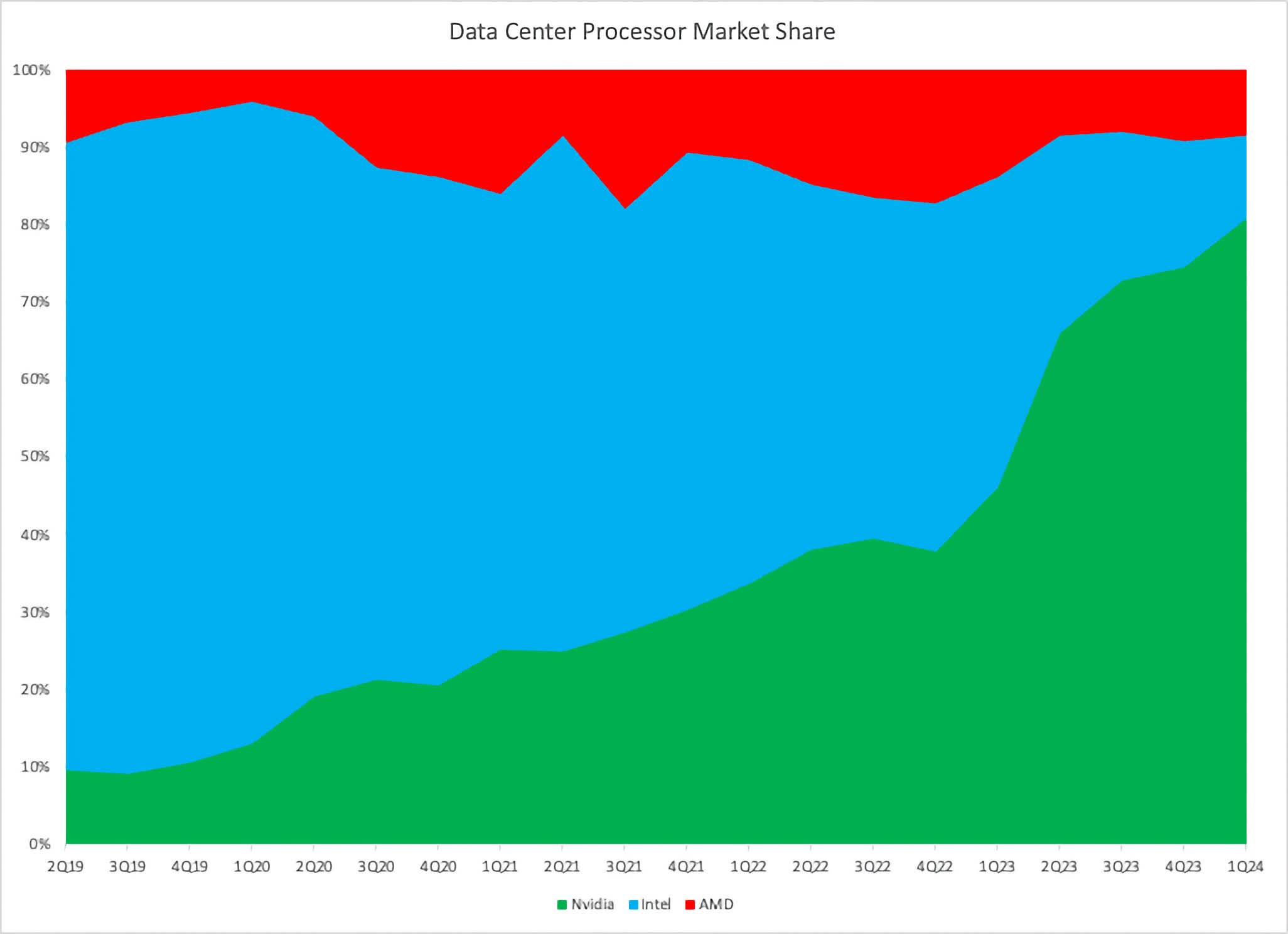

It is hard to grasp how large Nvidia has become and how quickly it got there. Our preferred metric at the moment is Nvidia's share of wallet for data center processors, calculated from the data center revenue reported by Nvidia, Intel, and AMD. That figure reached 81% in the quarter. Nvidia took a point of share from AMD and five points from Intel.

In fairness, Nvidia's data center revenue includes networking products such as InfiniBand. Conveniently, the company has begun breaking out its data center networking revenue. In theory, we should remove that from the calculation, as neither AMD nor Intel has comparable products.

That being said, that spend comes from the same set of customers. Even stripping it out only brings Nvidia's share down to 78%. To put Nvidia's networking business in context, Arista, a company that only sells networking products, did $5.3 billion in revenue for all of 2023.
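The share-of-wallet metric is simple arithmetic, and a sketch shows why removing networking moves the number only a few points. Below is a minimal Python version, assuming the inputs are each company's reported data center revenue for the same quarter. The function names are our own, and the example inputs are illustrative round numbers, not actual reported figures.

```python
def share_of_wallet(nvidia: float, intel: float, amd: float) -> float:
    """Nvidia's share of combined data center revenue across the three vendors."""
    total = nvidia + intel + amd
    return nvidia / total


def share_excluding_networking(nvidia: float, nvidia_networking: float,
                               intel: float, amd: float) -> float:
    """Same metric after removing Nvidia's networking revenue.

    Subtracting networking shrinks both the numerator and the total,
    so the share falls by less than the networking fraction itself.
    """
    compute_only = nvidia - nvidia_networking
    return share_of_wallet(compute_only, intel, amd)


# Illustrative inputs only (hypothetical, in arbitrary units):
print(share_of_wallet(8.0, 1.0, 1.0))                  # 0.8
print(share_excluding_networking(9.0, 1.0, 1.0, 1.0))  # 0.8
```

Because the networking revenue leaves the denominator as well as the numerator, a sizable networking business only trims a dominant share modestly, which is consistent with the move from 81% to 78%.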

A few other things stood out to us. Nvidia claims it is now supplying 100 "AI factories," which are data centers operated independently of the hyperscalers. We wrote about these a few months back, and we remain somewhat cautious about the subject. There is nothing wrong with Nvidia's numbers, but if problems do emerge, we expect this sector to telegraph them first.

It is worth noting that many of these AI factories turn around and resell capacity to the hyperscalers, which is a bit circular. We are also uncomfortable with the fact that Nvidia seems to have an extra-heavy hand with these companies. Nvidia is their major supplier, really the basis of their business model in these times of tight GPU supply. Nvidia also seems to sell them its whole stack (complete systems) and likely designs those systems as well. On top of all that, Nvidia is likely an investor in many of them.

The company also added some color to its commentary about "Sovereign AI," which they are now defining as "a nation's capabilities to produce AI using its own infrastructure."

The emphasis this quarter was on AI factories built in conjunction with telecom networks, such as Japan's $740 million investment in a project led by telco KDDI, Sakura Internet, and SoftBank to build up that country's "AI Infrastructure." This is another area that makes us a bit uncomfortable. A market segment built on governments developing their own AI capabilities feels a bit too vague. On the other hand, it is clearly generating a lot of revenue for the company.

Probably the most asked-about topic on the call was the extent of demand. The company indicated they expect demand to outstrip supply well into next year. Going into the call, there were concerns that the company's new Blackwell line of GPUs would cannibalize sales of the previous generation Hopper chips. The short answer is that there does not seem to be a problem, with the company becoming even more supply-constrained for certain Hopper products during the quarter.

When asked how customers feel about current purchases when they see new products coming to market, Huang replied they will "performance average their way into it." This is his way of saying customers will buy whatever they can get their hands on. We actually heard a better explanation today: customers know Nvidia's roadmap and are building plans around that. They will use B200 when they can get some and H100 when they can't.

We should also point out that, if we turn this argument around, Nvidia is developing products at an enviable pace and deserves credit for the advances it is driving. Importantly, Nvidia has to contend with the slowdown in Moore's Law as much as anyone else, yet it is still delivering performance gains by pulling every lever it can. This includes its comprehensive design of data centers and its complete-systems approach. This could end up being a strong force in the company's favor over the long term, as currently no one else (outside the top hyperscalers) has the ability to pull all those levers.

All in all, Nvidia continues to fire on all cylinders. We know where to look for problems, but there are currently no signs of trouble on the horizon. Demand remains very strong, and the company looks set to outpace the competition for the foreseeable future.