Over the past few weeks we've been looking at DLSS 2 and FSR 2 to determine which upscaling technology is superior in terms of performance but also in terms of visual quality using a large variety of games. On the latter, the conclusion was that DLSS is almost universally superior when it comes to image quality, extending its lead at lower render resolutions.

But one claim we often hear is that DLSS (and potentially other upscalers) delivers "better than native" image quality, giving gamers not only a performance boost relative to native rendering, but a generally superior visual experience. Today we're going to find out whether that's true.

We'll be reviewing a selection of games in detail with some accompanying video before going through our full 24 game summary. We have examined all games using three configurations: 4K using Quality mode upscaling, 4K using Performance mode upscaling, and 1440p using Quality mode upscaling.

We feel these are the most relevant use cases for upscaling. We dropped 1440p Performance mode to save us some time and because honestly that configuration is not very impressive relative to native rendering.

But before diving into the analysis, it's probably best to define what we mean by native rendering: rendering at the output resolution with no scaling, so for a 4K output this means a 4K render resolution. In contrast, Quality mode upscaling produces a 4K output from a 1440p render resolution, and Performance mode upscaling uses a 1080p render resolution for 4K output.
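To make those render resolutions concrete, here's a minimal Python sketch that derives them from the output resolution. The per-axis scale factors (1.5x for Quality, 2.0x for Performance) are an assumption based on the commonly documented DLSS 2 and FSR 2 presets, and the function name is ours for illustration. Individual games may round the result slightly differently.

```python
from fractions import Fraction

# Assumed per-axis scale factors for the two modes discussed in this article.
# These match the 4K -> 1440p and 4K -> 1080p figures quoted above.
SCALE = {
    "Quality": Fraction(3, 2),      # 1.5x per axis
    "Performance": Fraction(2, 1),  # 2.0x per axis
}


def render_resolution(output_w, output_h, mode):
    """Return the (width, height) the game renders at before upscaling."""
    factor = SCALE[mode]
    # Exact rational division, then round to the nearest whole pixel.
    return round(output_w / factor), round(output_h / factor)


if __name__ == "__main__":
    configs = [
        ((3840, 2160), "Quality"),
        ((3840, 2160), "Performance"),
        ((2560, 1440), "Quality"),
    ]
    for (w, h), mode in configs:
        rw, rh = render_resolution(w, h, mode)
        share = (rw * rh) / (w * h)  # fraction of output pixels actually rendered
        print(f"{w}x{h} {mode}: renders {rw}x{rh} ({share:.0%} of output pixels)")
```

Under these assumptions, Quality mode renders a bit under half of the output pixels and Performance mode renders a quarter, which is useful context for the image quality gaps discussed later.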

This also means we're using the game's highest quality built-in anti-aliasing technology, which is typically TAA. Upscalers like DLSS and FSR replace the game's native anti-aliasing as they perform both upscaling and anti-aliasing in the one package. So for native rendering we are going to use built-in anti-aliasing like TAA, avoiding low quality techniques like FXAA where possible, as well as avoiding stuff like DLAA which isn't the focus of this feature.

All games were tested using their most up to date version as of writing, so we're checking out brand new, fresh comparisons. However, we are using the version of DLSS and FSR included with each game – that means no DLSS DLL file replacement, and no modding games to introduce DLSS or FSR support where there is none – just the existing native use of upscaling in these games.

DLL file swaps and mods are popular in the enthusiast community to take upscaling to the next level or bring in new features, but this article is about both the image quality and implementation. However we'll touch on this a bit in the conclusion, as for DLSS vs. Native it is an important consideration.

All video captures were taken on a Ryzen 9 7950X test system with a GeForce RTX 4080 graphics card, 32GB of DDR5-6000 CL30 memory, and MSI X670E Carbon WiFi motherboard. All games were tested using the highest visual quality settings unless otherwise noted, with motion blur, chromatic aberration, film grain and vignetting disabled.

Ray tracing was enabled for some games that could deliver over 60 FPS natively at 4K. We've also equalized sharpening settings between DLSS and FSR in all games where possible, to deliver similar levels of sharpness. This may not mean the same sharpness setting number in the game, but the same resulting sharpness, or as close as we could get it.

Let's get into the testing...

Spider-Man Miles Morales

Let's start with Spider-Man Miles Morales. This is a clear example where native rendering is obviously superior to upscaling. This game has excellent built-in TAA which delivers a high level of image stability, good levels of sharpness without being oversharpened, and plenty of detail.

Right during the opening scene we see that the native 4K presentation has superior hair rendering on Miles' coat, with less shimmering in motion and great levels of clean detail. This is true even using Quality mode upscaling...

For live image quality comparisons, check out the HUB video below:

This game also has high quality texture work, which is less prone to moire effect artifacts when looking at the native presentation versus upscaling. The shirt Miles is wearing here is clearly rendered better using native TAA. There were a couple of instances where the TAA image was the only version to correctly render the depth of field effect. And generally when swinging around the city there wasn't a huge difference between DLSS and TAA image stability, with FSR clearly inferior in this regard.

All of these issues are exaggerated when comparing native rendering to the DLSS and FSR Performance modes. Hair shimmering is even more obvious. Moire artifacts are more prevalent and more obvious. Depth of field effects break more often. The image is less stable than native. But using the Performance upscaling modes doesn't totally break the experience. They are still usable; you just have to accept reduced image quality versus native.

A lot of the issues we talked about here are also present at 1440p using the Quality mode, but the biggest difference I spotted compared to testing at 4K is that the native presentation is a lot softer here and less detailed.

Both DLSS and FSR are capable of producing a sharper and more detailed image at times, however this is offset by worse image stability in many areas. I would give a slight edge to native rendering here as its stable presentation is more playable in my opinion, but it was interesting to see that upscaling is actually a bit closer here than at 4K, at least in this game.

The Last of Us Part I

The Last of Us is a great example of the latest upscaling technologies as it uses both DLSS 2 version 3 as well as FSR 2.2. It's also a highly detailed game with high quality textures using ultra settings, which can throw up a few issues for upscalers. I found it very difficult to call it one way or another when comparing native rendering to DLSS's Quality mode at 4K.

The native image is a little sharper and more detailed, however the DLSS image generally has slightly better image stability. There is a bit less shimmering in foliage-heavy scenes as shown in the video, although the difference is much less pronounced than DLSS vs FSR, and there is also better stability for the fencing issue we exposed in a previous video.

The major difference I spotted was with particle effects. Dust and spores look significantly better rendered natively at 4K, with far better fine detail, and just simply more particles being rendered. Given that otherwise DLSS and native are quite close, this difference tips me slightly in favor of native rendering at 4K.

The 4K Performance mode is not able to get as close to the native presentation, but I was quite impressed with its image stability in foliage heavy scenes like this forest. The issue with particles gets much more pronounced though, it's clear that the native presentation is rendering at a much higher resolution which has a big impact on image quality. Again, I still think the DLSS 4K Performance mode is usable, but it is not able to deliver native or better than native image quality.

The Last of Us Part I is another example where I felt the battle between native and upscaling is closer at 1440p than it is at 4K. For example, the particle issues aren't as obvious at 1440p. There is still a difference, and you can definitely still tell native rendering is superior, but the gap has closed.

However, in other areas, I feel DLSS takes the lead for image stability. For example, the difference in stability between native 4K and native 1440p in the forest area is very obvious – native 1440p is a large downgrade, while DLSS 1440p Quality mode is able to deliver a more stable image with less shimmering. It seems that like in Spider-Man, the native TAA implementation is not as effective at 1440p as it is at 4K, which makes me inclined to give DLSS a slight edge here.

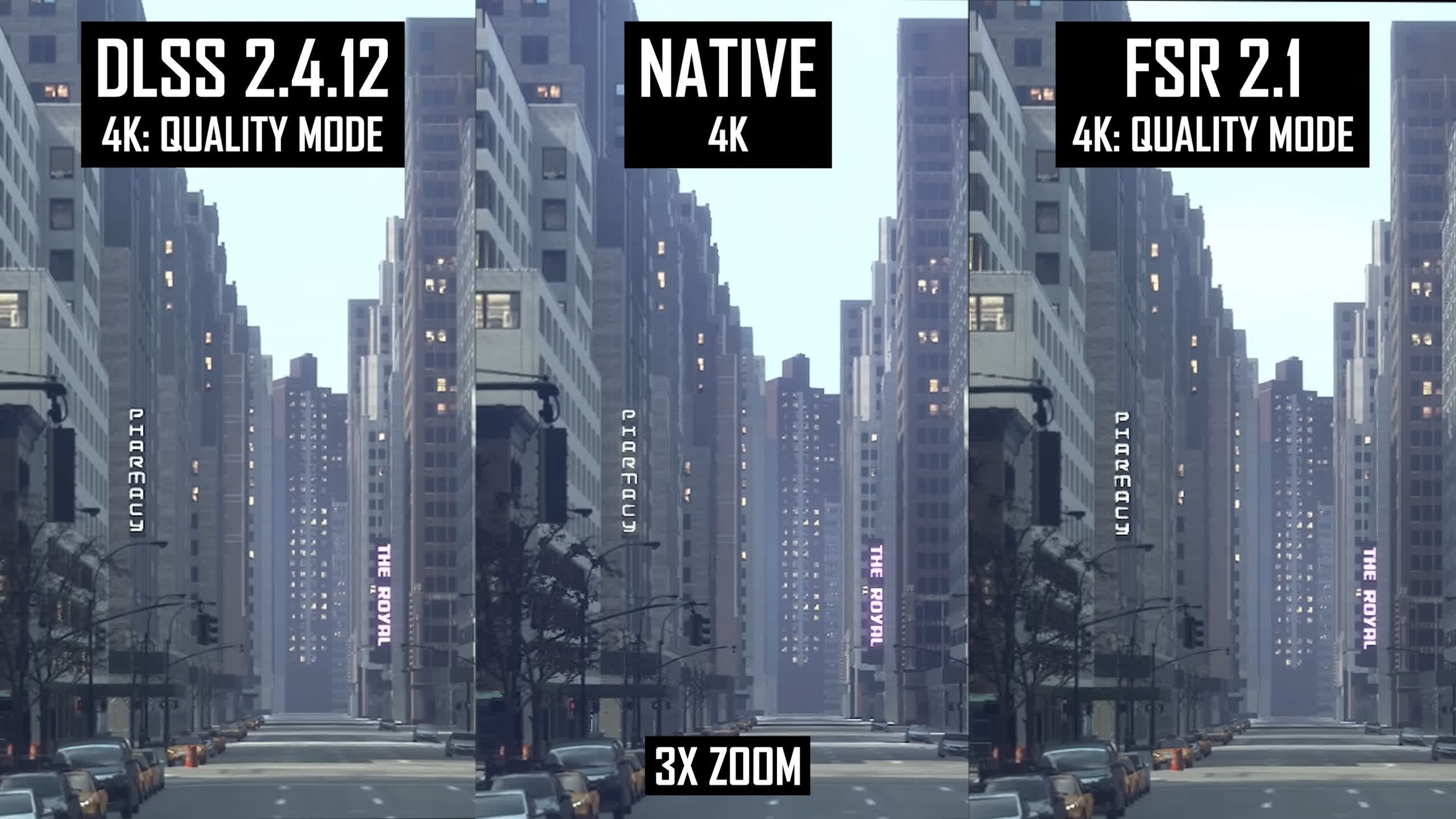

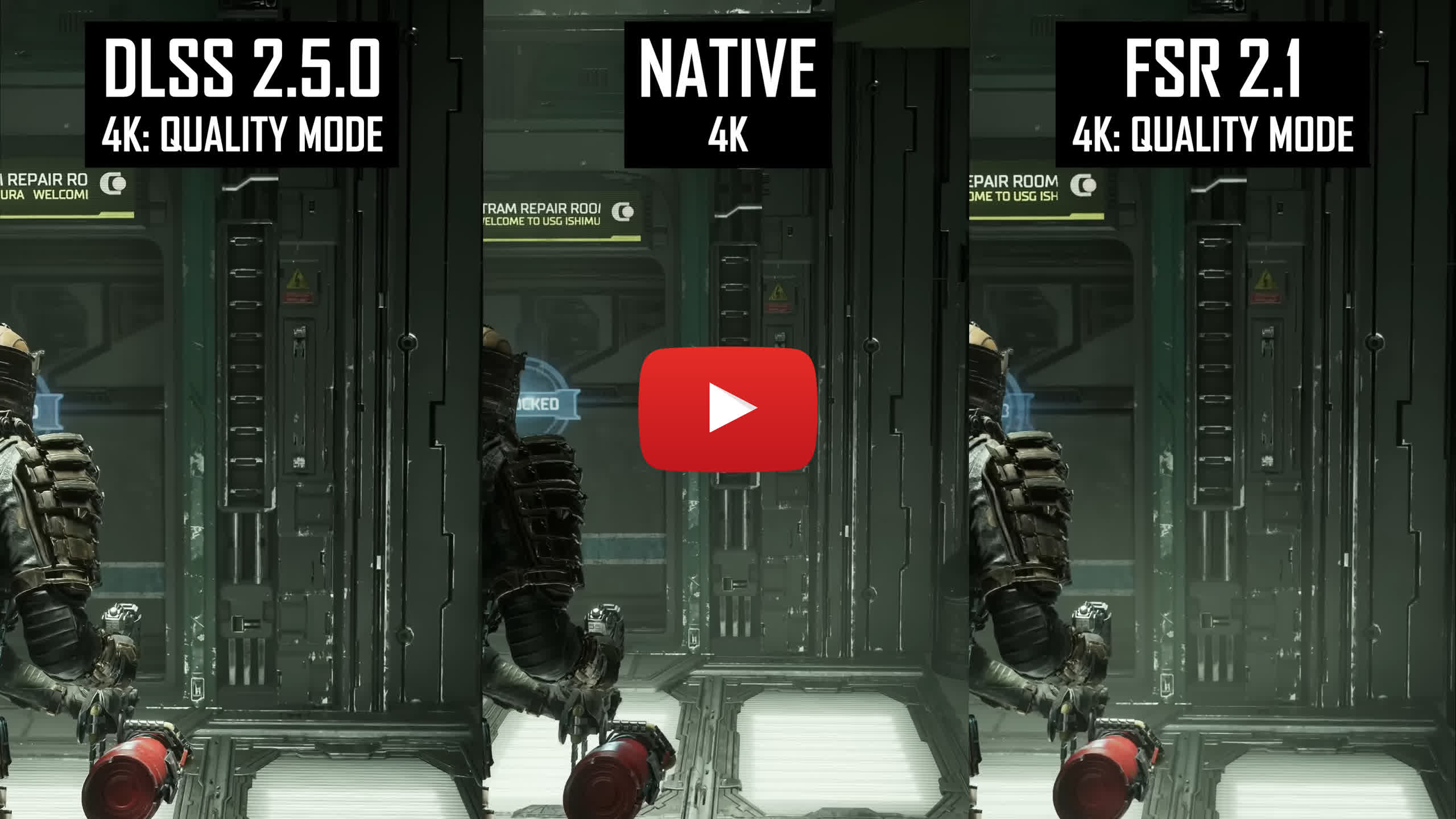

Dead Space

Dead Space returns in this comparison as we had previously found a few issues with the DLSS and FSR implementations at 4K using the Quality mode – namely ghosting in the DLSS image, and shimmering in the FSR image.

At least when it comes to ghosting, the native presentation has a clear advantage over DLSS in this title, however it's actually the FSR 2.1 image that has the fewest issues in this scene. It's not all great news for native 4K though, as the built-in TAA implementation has worse image stability for oblique textures and geometry than DLSS.

In this scene flickering is more obvious in the native image than DLSS, however, in general the native image also has slightly more detail and is sharper, so combined with the ghosting advantage we'd say native is slightly better, but it's close.

Native 4K is moderately better than 4K using the DLSS Performance mode. The image has superior sharpness and clarity, and much less ghosting. There are still times where DLSS is able to reduce texture flickering compared to native TAA, but in my opinion this is not enough of an advantage in its favor.

At 1440p, ghosting is reduced in the DLSS Quality image compared to 4K, however native 1440p still has the edge in this area. The flickering comparison shows DLSS as having reduced stability compared to 4K, however it is still ahead of native 1440p here, though only slightly. DLSS is also noticeably softer than native 1440p, though overall I'd say the native image only has a small edge in image quality.

Dying Light 2

In Dying Light 2 it was very difficult to tell the difference between DLSS Quality upscaling at 4K, and the game's native 4K presentation. There are a few areas where each method trades blows – DLSS has slightly more particle ghosting than native, but slightly better fine detail reconstruction – but realistically speaking there's no significant win either way. This means that DLSS in Dying Light 2 is indeed as good as native rendering, so it's well worth enabling for that performance benefit.

When looking at the Performance upscaling mode, native rendering does have a slight advantage, but honestly the two are still quite close. A bit more shimmering in the Performance mode image is the main difference relative to the Quality mode, which makes it fall that bit behind native, but I'd still say the Performance mode is very usable given its performance advantage.

When examining the 1440p Quality mode, once again this is basically a tie. It's a more exaggerated version of what we saw at 4K using the Quality mode: I had DLSS looking a bit softer but a bit more stable. Realistically they are both very close, in which case you'd just use DLSS as it delivers more performance.

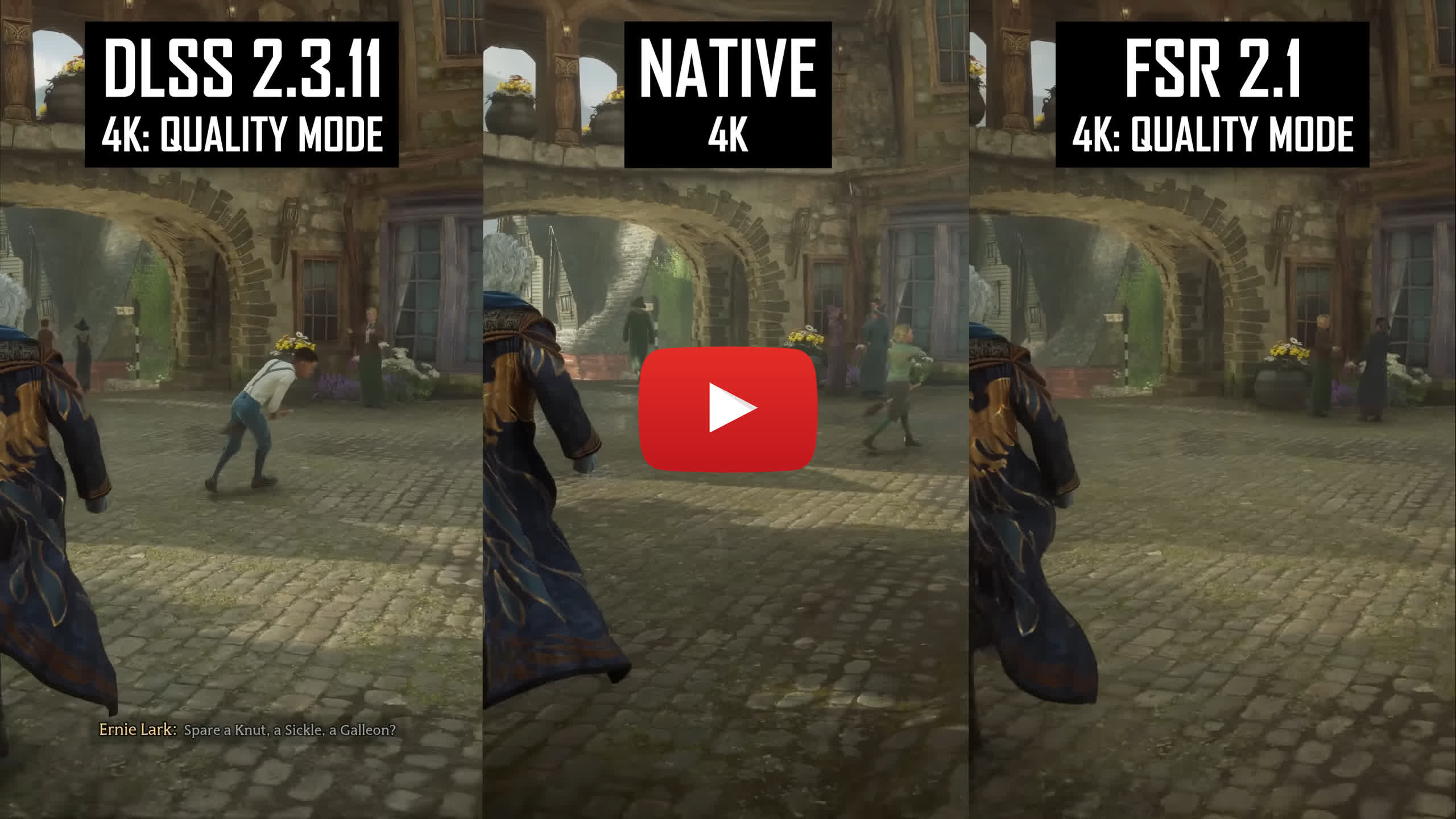

Hogwarts Legacy

Hogwarts Legacy is a game that I feel looks slightly better using the DLSS Quality mode versus native rendering at 4K. Like what we saw comparing DLSS vs FSR, the main difference here is in foliage rendering. DLSS is slightly more stable with a small advantage in shimmering and flickering.

Native rendering isn't a huge offender for foliage flickering but it does do it to a slightly greater degree than DLSS. Native is clearly superior to FSR though, which rendered foliage at an obviously reduced quality level.

I found it quite difficult to spot any differences between native rendering and the 4K performance mode for DLSS. Under these conditions I'd say the two are equivalent which is impressive for Nvidia's upscaling, though less impressive for the game's built-in anti-aliasing solution which when rendering natively does have some stability issues.

Then when we check back to 1440p Quality mode upscaling versus native 1440p, I'd again say that DLSS is slightly better here with that small increase in image stability.

God of War

I wanted to show God of War in the scene below for one specific reason, and that's how native vs upscaling can differ drastically between different configurations. For example, using the 4K Quality mode for DLSS and FSR, versus native 4K, I'd say that DLSS looks quite similar to native rendering. There are areas where it trades blows but overall, pretty similar image quality and I'd certainly be very happy using DLSS knowing I was getting a great experience.

But then switching to the 4K Performance mode for DLSS, there is horrible foliage flickering in the background. It's pretty distracting and looks absolutely terrible.

And while this is more of an issue with cutscenes than gameplay sections, I'd much rather be playing with native rendering, or the DLSS Quality mode.

Hitman 3

Hitman 3 is a great showing for DLSS Quality mode at 4K compared to native rendering. In a title that's more slow paced like this, DLSS has a clear advantage over native rendering in terms of image stability. Areas like the overhead grates flicker much less in the DLSS image, reflections also flicker less, and there's more rain being reconstructed here than even in the native presentation.

While I think the game looks a bit oversharpened using DLSS versus native rendering and FSR 2, the superior stability gives it a moderate advantage and does indeed produce better than native rendering...

Using the 4K Performance mode it's more of a tie, which in itself is still a great result for DLSS given it's rendering at a much lower resolution. Image stability is less impressive, and there are areas where it's noticeable that the render resolution has been reduced, such as in reflections which are noticeably worse than native rendering. However I still think DLSS's performance mode has better stability than native rendering and better fine detail reconstruction, hence the tie.

Then when looking at 1440p, it's a mixture of what we saw using the 4K Quality and Performance modes. Generally, DLSS is again more stable in its presentation and is better at reconstructing fine details. In fact, there are some areas where even FSR 2.1 delivers better image quality than native, though it is quite a bit inferior to DLSS. I think this is indicative of less than stellar native image quality, particularly its TAA which seems to be quite unstable relative to cutting edge upscalers.

Death Stranding

But oh boy, Hitman 3 is far from the worst in terms of native image quality. That goes to Death Stranding Director's Cut, which looks absolutely terrible in its native presentation compared to DLSS and even FSR. Huge amounts of shimmering, flickering and image instability in the scene shown, it's night and day compared to DLSS, which is clearly the best way to play the game.

This is the only example I found where DLSS is significantly better than native, but it does provide proof that it is possible for DLSS to be much better than native, to the point where native is almost unusable because it flickers so much. And this is using the 4K Quality mode. Even using 4K Performance we'd say DLSS has a noticeable advantage in terms of image stability, which is wild given the difference in render resolution.

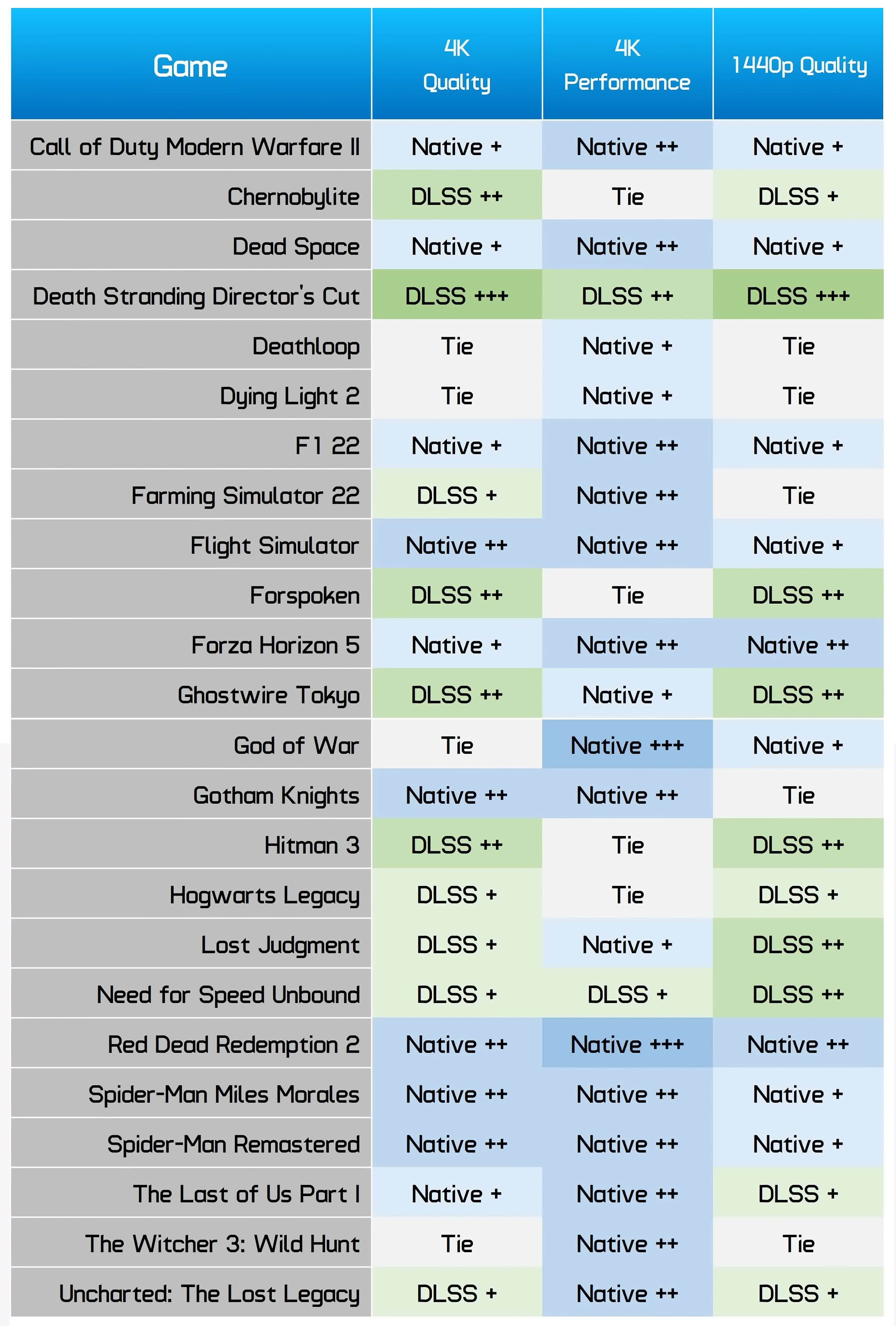

Native Resolution vs Upscaling: 24 Game Summary

Now it's time to look across all 24 games to see where DLSS vs FSR vs Native image quality ends up overall.

Each configuration could be awarded one of 10 grades. A + sign beside one of the rendering methods indicates that method was slightly better, so DLSS + means DLSS was the best option, and slightly better than the other two methods. Two pluses means moderately better, and three pluses means significantly better. A tie is also possible between two or more of the rendering techniques.
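For readers who want to see where the figure of 10 grades comes from, here's a small Python sketch that enumerates them: three rendering methods times three margins of victory, plus a tie. The names `METHODS`, `MARGINS`, `all_grades` and `describe` are hypothetical and exist only for this illustration.

```python
from itertools import product

# The three rendering methods compared in this article.
METHODS = ("DLSS", "FSR", "Native")

# Number of plus signs mapped to the margin of victory it represents.
MARGINS = {1: "slightly better", 2: "moderately better", 3: "significantly better"}


def all_grades():
    """List every grade a configuration can receive."""
    grades = [f"{method} {'+' * pluses}" for method, pluses in product(METHODS, MARGINS)]
    grades.append("Tie")
    return grades


def describe(grade):
    """Turn a grade such as 'DLSS ++' into a plain-English description."""
    if grade == "Tie":
        return "no clear winner"
    method, pluses = grade.split()
    return f"{method} is {MARGINS[len(pluses)]}"


if __name__ == "__main__":
    for grade in all_grades():
        print(f"{grade:<12} {describe(grade)}")
```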

However, as we discovered in our DLSS vs FSR review, DLSS was always able to at least tie with FSR in any given configuration, so realistically this grading is a simple DLSS vs Native battle, as FSR can be removed from contention. You can go back and look at whether native or FSR is in third place, and there are a few situations where FSR is able to deliver better image quality than native rendering, but for the most part, it's either DLSS or Native that we're most interested in.

Across the 24 games it's very much a mixed bag in the configurations we analyzed. Whereas with DLSS vs FSR it was a clear victory for DLSS in almost every scenario, the same can't be said for DLSS vs Native rendering. There are situations where DLSS is able to deliver better than native image quality, but there are other times where native is clearly superior. It really depends on the game, its upscaling implementation, and the native image quality and implementation of features like TAA.

At 4K using Quality upscaling, it's almost a 50/50 split between whether DLSS or Native rendering is superior. I concluded that native was moderately better in 5 games, and slightly better in another 5 games. DLSS was slightly better in 5 games, moderately better in 4 games, and significantly better in 1 game. There were also 4 ties. This is a near exact 50/50 split with 10 wins for native rendering and 10 wins for DLSS.

At 1440p using Quality upscaling it was a similar story. Native was moderately better in 2 games and slightly better in 7 games. DLSS was moderately better in 5 games, slightly better in 4 games and significantly better in 1 game, plus there were 5 ties. This gives DLSS a small edge over native rendering at 1440p, though not by a significant amount and again there is quite a bit of variance.
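The Quality mode tallies quoted in the last two paragraphs can be sanity-checked with a few lines of Python. The data layout and the `summarize` function are our own for illustration. The zero entries for native being "significantly" better are an assumption inferred from the listed counts already adding up to 24 games.

```python
TOTAL_GAMES = 24

# Win counts transcribed from the 4K Quality and 1440p Quality paragraphs.
# "significantly": 0 for Native is assumed, as no such result is mentioned.
RESULTS = {
    "4K Quality": {
        "Native": {"slightly": 5, "moderately": 5, "significantly": 0},
        "DLSS": {"slightly": 5, "moderately": 4, "significantly": 1},
        "Tie": 4,
    },
    "1440p Quality": {
        "Native": {"slightly": 7, "moderately": 2, "significantly": 0},
        "DLSS": {"slightly": 4, "moderately": 5, "significantly": 1},
        "Tie": 5,
    },
}


def summarize(config):
    """Collapse the per-margin counts into wins, ties and DLSS win share."""
    result = RESULTS[config]
    native_wins = sum(result["Native"].values())
    dlss_wins = sum(result["DLSS"].values())
    ties = result["Tie"]
    # Every game must land in exactly one bucket.
    if native_wins + dlss_wins + ties != TOTAL_GAMES:
        raise ValueError(f"{config}: counts do not add up to {TOTAL_GAMES}")
    return {
        "native_wins": native_wins,
        "dlss_wins": dlss_wins,
        "ties": ties,
        "dlss_share": dlss_wins / TOTAL_GAMES,
    }


if __name__ == "__main__":
    for config in RESULTS:
        s = summarize(config)
        print(
            f"{config}: Native {s['native_wins']}, DLSS {s['dlss_wins']}, "
            f"ties {s['ties']}, DLSS better in {s['dlss_share']:.0%} of games"
        )
```

With these numbers, DLSS comes out ahead in 10 of 24 games in both Quality configurations, while native wins 10 at 4K and 9 at 1440p.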

What is clear though is that native rendering is superior to using the 4K Performance DLSS mode, and this is why we typically don't recommend using it. Native rendering was at least moderately better in 14 of 24 games, and in general native was superior 75% of the time, so it's clear that the Performance mode, even in the best case scenario at 4K, is reducing visual quality to achieve higher levels of performance.

What We Learned

After all that analysis, is it accurate to say that DLSS upscaling delivers better than native image quality? We think it depends, and as a blanket statement or generalization we wouldn't say DLSS is better than native. There are plenty of games where native image quality is noticeably better, and this is especially true when using lower quality DLSS settings like the Performance mode, which rarely delivers image quality on-par with native rendering.

However, it is true that DLSS can produce a better than native image; it just depends on the game. At 4K using the Quality mode, for example, we'd say that around 40% of the time DLSS was indeed producing a better than native image. Often this comes down to the native image: on a handful of occasions native rendering wasn't great, likely due to a poor TAA implementation. But on other occasions the native image looks pretty good, it's just that DLSS looks better or has an advantage at times.

We also think it's quite rare that native rendering would be preferred over DLSS when factoring in the performance advantage that DLSS provides. This can depend on the level of performance you're getting, for example with an RTX 4090 there are some games that run so well the performance uplift from DLSS is not very useful.

There are also occasions you'll be CPU limited, in which case DLSS won't provide much of a performance benefit. But generally, even when native rendering delivers better image quality, it isn't to the degree where worse performance is justified. At least to me, and I know this can be quite subjective, there were only a few instances where the artifacts using DLSS outweighed the performance benefit.

It's also true that at times, FSR 2 is capable of better than native rendering, but these instances are much rarer than with DLSS. A lot of the time, the artifacts we see in a game's native presentation are more pronounced when using FSR, like flickering, shimmering or issues with fine detail reconstruction. DLSS does a much better job of fixing issues with native rendering compared to FSR – though there are times when FSR looks great and can be very usable. In Death Stranding, for example, we'd far prefer to use FSR compared to native rendering.

While DLSS can look better than native rendering at times, we suspect this would be the case in more titles were game developers more proactive about updating the DLSS versions used with their games.

Take Red Dead Redemption 2 as an example, which currently uses DLSS 2.2.10, an older version of DLSS that ends up allowing native rendering to look moderately better. If that game were using the very latest version of DLSS instead (3.1.11 as of writing), it would be a completely different story. After manually replacing the DLSS DLL file, the presentation is a lot better, ultimately delivering image quality equivalent to native or even better at times.
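As a small aside on comparing version numbers like these, here's a hedged Python sketch. It assumes versions are plain dot-separated integers, and the helper names are hypothetical. It does not read the version from any DLL; the strings are simply the two versions mentioned above.

```python
def parse_version(text):
    """Split a dotted version string such as '2.2.10' into a tuple of integers."""
    return tuple(int(part) for part in text.split("."))


def is_outdated(shipped, latest):
    """Return True if the shipped version is older than the latest one."""
    return parse_version(shipped) < parse_version(latest)


if __name__ == "__main__":
    shipped, latest = "2.2.10", "3.1.11"
    print(f"DLSS {shipped} older than {latest}: {is_outdated(shipped, latest)}")
    # Comparing the raw strings would be misleading: "2.2.10" sorts before
    # "2.2.9" alphabetically, even though 2.2.10 is the newer release.
    print("2.2.10" < "2.2.9", parse_version("2.2.10") < parse_version("2.2.9"))
```

Comparing the parsed tuples rather than the raw strings avoids the trap where a two-digit patch number sorts ahead of a one-digit one.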

Yes, replacing the DLL file is a neat trick that enthusiast gamers and modders can do, but it's not something that mainstream PC gamers are doing – similar to how most PC gamers don't overclock or undervolt their system. Developers should be more proactive about using the latest versions of DLSS, especially as there are lots of games receiving active updates that don't upgrade the DLSS DLL, and plenty more that launch with outdated versions of the technology.

It's not even that complicated of an upgrade, and it can often solve obvious visual issues. The same can be said for FSR. While it's not currently possible to easily update the FSR version yourself, there are plenty of games currently running old versions or launching with old versions of the technology.

Nvidia have made it easier to do this with newer versions of DLSS and their wrapper framework Streamline, allowing developers to opt-in to automatic DLSS updates. We'd say it's essential for developers to use this feature, so that the best image quality is delivered to gamers with no intervention on their part.